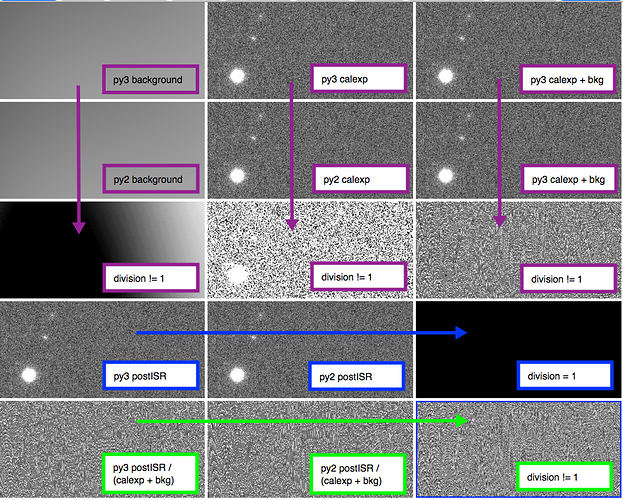

The background model used by pipe_tasks (I believe this is the job of meas_algorithms) apparently has gradients that are quantized differently in Python 2 and Python 3. The following series of images illustrates the problem and shows that the only difference lies in the background, most likely because of a difference in floating-point precision.

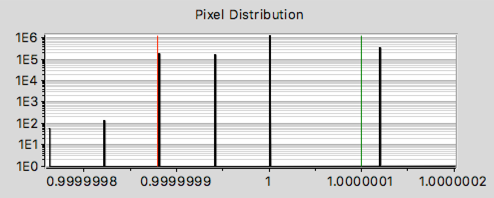

The (row 3, col 3) and (row 5, col 3) images above have essentially identical pixel value distributions. Every pixel in both of the ratios

- `(py3_calexp + py3_bkg) / (py2_calexp + py2_bkg)`
- `[py3_postISR / (py3_calexp + py3_bkg)] / [py2_postISR / (py2_calexp + py2_bkg)]`

is very close to 1, but deviates from 1 in discrete steps of up to ± 0.0000002. This points to a discrepancy in how the background gradient is discretized between py2 and py3.
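A minimal sketch of how these discrete steps could be quantified, assuming the summed images are available as float32 NumPy arrays (the helper `ratio_deviation_levels` is hypothetical, not part of pipe_tasks or meas_algorithms). Expressing the deviation from 1 in float32 units in the last place (ULPs) makes the spread easier to read: the float32 spacing is about 1.19e-7 just above 1.0 and about 5.96e-8 just below it, so ± 0.0000002 would amount to only a few float32 ULPs. That is consistent with a precision difference, though it remains a hypothesis.

```python
import numpy as np

# Gap between 1.0 and the next larger float32 (about 1.19e-7).
FLOAT32_ULP_AT_ONE = float(np.spacing(np.float32(1.0)))


def ratio_deviation_levels(numer, denom):
    """Return (levels, counts) for the deviation of numer/denom from 1,
    expressed in multiples of the float32 spacing at 1.0.

    Hypothetical diagnostic helper: `numer` and `denom` stand in for, e.g.,
    (py3_calexp + py3_bkg) and (py2_calexp + py2_bkg).
    """
    numer = np.asarray(numer, dtype=np.float32)
    denom = np.asarray(denom, dtype=np.float32)
    ratio = numer / denom  # IEEE float32 division, correctly rounded
    # Promote to float64 before subtracting so the deviation itself is exact.
    deviation = (ratio.astype(np.float64) - 1.0) / FLOAT32_ULP_AT_ONE
    # Discrete levels show up as a small set of unique values.
    return np.unique(deviation, return_counts=True)
```

A handful of unique levels (rather than a continuous spread) would support the idea that the two backgrounds differ only by float32 rounding.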

Therefore, I plan to submit DM-7292 for review with only minor formatting changes and new comments in testProcessCcd.py explaining why the thresholds (initially discussed here) are currently larger than they should be: they must be this large for the test to pass. I will open new tickets to identify and fix the underlying floating-point background problem in meas_algorithms and to write a test ensuring this does not happen again. See: DM-8017.
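For the follow-up test, one option (an assumption on my part, not what testProcessCcd.py currently does) is to state the tolerance in float32 ULPs instead of as a fixed threshold, using NumPy's `np.testing.assert_array_max_ulp`. The helper name `check_background_consistency` and the `max_ulps` default are hypothetical.

```python
import numpy as np


def check_background_consistency(image, reference, max_ulps=2):
    """Hypothetical regression check: raise AssertionError if any pixel of
    `image` is more than `max_ulps` float32 ULPs away from `reference`.

    Returns the per-pixel ULP differences when the check passes.
    """
    image = np.asarray(image, dtype=np.float32)
    reference = np.asarray(reference, dtype=np.float32)
    # assert_array_max_ulp compares the integer representations of the floats,
    # so the tolerance scales automatically with pixel value.
    return np.testing.assert_array_max_ulp(image, reference, maxulp=max_ulps)
```

A ULP-based bound stays meaningful across pixels of very different brightness, whereas a single absolute threshold has to be loosened to accommodate the brightest pixels.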