Hello,

I work for Euclid and am responsible for LSST data processing within the Euclid infrastructure.

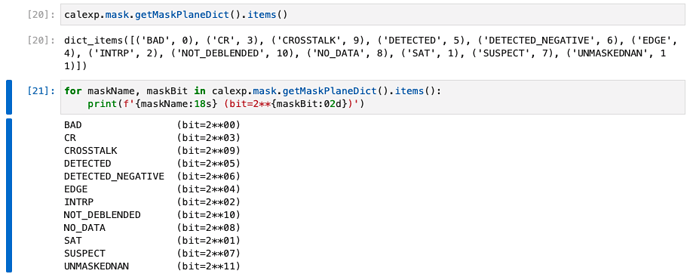

I was asked about the bit flags used in LSST, and noticed that your documentation refers to mask plane numbers, whereas the Euclid documentation uses bit mask values.
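If the usual convention holds (and this is my assumption, not something I have confirmed for LSST), plane number n corresponds to the single bit 1 << n, so converting between the two is a shift. A minimal Python sketch, where both function names are my own:

```python
def plane_to_bit_value(plane: int) -> int:
    """Bit mask value for a mask plane number, assuming value = 2**plane."""
    if plane < 0:
        raise ValueError("plane number must be non-negative")
    return 1 << plane


def bit_value_to_plane(value: int) -> int:
    """Mask plane number for a single-bit mask value (inverse of the above)."""
    # value & (value - 1) is zero only when exactly one bit is set
    if value <= 0 or value & (value - 1):
        raise ValueError("value must be a single power of two")
    return value.bit_length() - 1


# Print plane number, hex flag and decimal value for the first 8 planes
for plane in range(8):
    value = plane_to_bit_value(plane)
    print(plane, f"0x{value:08X}", value)
```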

My guess is that the correspondence between the two is as follows:

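| BIT_PLANE | FLAGMAP_BIT | BIT_VALUE | Name |
|---|---|---|---|
| 0 | 0x00000001 | 1 | BAD |
| 1 | 0x00000002 | 2 | SAT |
| 2 | 0x00000004 | 4 | INTRP |
| 3 | 0x00000008 | 8 | CR |
| 4 | 0x00000010 | 16 | EDGE |
| 5 | 0x00000020 | 32 | DETECTED |
| 6 | 0x00000040 | 64 | DETECTED_NEGATIVE |
| 7 | 0x00000080 | 128 | SUSPECT |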
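To make the question concrete: if this mapping is right, a mask pixel value is just the sum of the bit values of the planes set on it, and it can be decoded with a bitwise AND. Here is a small Python sketch; `GUESSED_PLANES`, `decode_mask` and `encode_mask` are hypothetical names of mine, and the plane numbers are only my unconfirmed guess from the table.

```python
# Guessed LSST mask plane numbers from the table (NOT confirmed).
GUESSED_PLANES = {
    "BAD": 0,
    "SAT": 1,
    "INTRP": 2,
    "CR": 3,
    "EDGE": 4,
    "DETECTED": 5,
    "DETECTED_NEGATIVE": 6,
    "SUSPECT": 7,
}


def decode_mask(pixel_value: int, planes: dict = GUESSED_PLANES) -> list:
    """Return the names of all planes whose bit is set, ordered by plane number."""
    ordered = sorted(planes.items(), key=lambda item: item[1])
    return [name for name, plane in ordered if pixel_value & (1 << plane)]


def encode_mask(names: list, planes: dict = GUESSED_PLANES) -> int:
    """Combine plane names into a single bit mask value with bitwise OR."""
    value = 0
    for name in names:
        value |= 1 << planes[name]
    return value


# 34 = 2 (SAT) + 32 (DETECTED)
print(decode_mask(34))
print(encode_mask(["SAT", "DETECTED"]))
```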

Can someone tell me whether this correspondence is correct?

Thank you for your help.

Rémi