Hi!

Thank you for your advice; it showed me that the error was related to the raw formatter. Following the direction you pointed out, I read the raw formatters of DECam and HSC and learned that we should use classes like `lsst.afw.image.ImageF` to control the pixel type used when reading the data.

I also have some questions about the raw formatter. Below is the DECam formatter class. It never tries to read extension 0; it only reads the extension related to the requested CCD.

```python
import logging

import astro_metadata_translator
import lsst.afw.fits
import lsst.afw.image
from lsst.obs.base import FitsRawFormatterBase
from lsst.obs.decam import DarkEnergyCamera

# detector_to_hdu (detector id -> expected HDU index) is a module-level
# mapping defined next to this class in obs_decam (not shown here).


class DarkEnergyCameraRawFormatter(FitsRawFormatterBase):
    translatorClass = astro_metadata_translator.DecamTranslator
    filterDefinitions = DarkEnergyCamera.filterDefinitions

    # DECam has a coordinate system flipped on X with respect to our
    # VisitInfo definition of the field angle orientation.
    # We have to specify the flip explicitly until DM-20746 is implemented.
    wcsFlipX = True

    def getDetector(self, id):
        return DarkEnergyCamera().getCamera()[id]

    def _scanHdus(self, filename, detectorId):
        """Scan through a file for the HDU containing data from one detector.

        Parameters
        ----------
        filename : `str`
            The file to search through.
        detectorId : `int`
            The detector id to search for.

        Returns
        -------
        index : `int`
            The index of the HDU with the requested data.
        metadata: `lsst.daf.base.PropertyList`
            The metadata read from the header for that detector id.

        Raises
        ------
        ValueError
            Raised if detectorId is not found in any of the file HDUs
        """
        log = logging.getLogger("DarkEnergyCameraRawFormatter")
        log.debug("Did not find detector=%s at expected HDU=%s in %s: scanning through all HDUs.",
                  detectorId, detector_to_hdu[detectorId], filename)

        fitsData = lsst.afw.fits.Fits(filename, 'r')
        # NOTE: The primary header (HDU=0) does not contain detector data.
        for i in range(1, fitsData.countHdus()):
            fitsData.setHdu(i)
            metadata = fitsData.readMetadata()
            if metadata['CCDNUM'] == detectorId:
                return i, metadata
        else:
            raise ValueError(f"Did not find detectorId={detectorId} as CCDNUM in any HDU of {filename}.")

    def _determineHDU(self, detectorId):
        """Determine the correct HDU number for a given detector id.

        Parameters
        ----------
        detectorId : `int`
            The detector id to search for.

        Returns
        -------
        index : `int`
            The index of the HDU with the requested data.
        metadata : `lsst.daf.base.PropertyList`
            The metadata read from the header for that detector id.

        Raises
        ------
        ValueError
            Raised if detectorId is not found in any of the file HDUs
        """
        filename = self.fileDescriptor.location.path
        try:
            index = detector_to_hdu[detectorId]
            metadata = lsst.afw.fits.readMetadata(filename, index)
            if metadata['CCDNUM'] != detectorId:
                # the detector->HDU mapping is different in this file: try scanning
                return self._scanHdus(filename, detectorId)
            else:
                fitsData = lsst.afw.fits.Fits(filename, 'r')
                fitsData.setHdu(index)
                return index, metadata
        except lsst.afw.fits.FitsError:
            # if the file doesn't contain all the HDUs of "normal" files, try scanning
            return self._scanHdus(filename, detectorId)

    def readMetadata(self):
        index, metadata = self._determineHDU(self.dataId['detector'])
        astro_metadata_translator.fix_header(metadata)
        return metadata

    def readImage(self):
        index, metadata = self._determineHDU(self.dataId['detector'])
        return lsst.afw.image.ImageI(self.fileDescriptor.location.path, index)
```

But suppose I mimic the DECam behavior, as in the class below, where I only read extension 1 via `fitsData.setHdu(1)`. Each ZTF FITS file I use has two HDUs: extension 0 is the primary HDU, and extension 1 holds the image from a single amplifier.

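```python
import lsst.afw.fits
import lsst.afw.image
from astro_metadata_translator import fix_header
from astro_metadata_translator.translators.ztf import ZTFTranslator
from lsst.obs.base import FitsRawFormatterBase

# ZTFCamera and ZTF_FILTER_DEFINTIONS come from my own obs package (imports not shown).


class ZTFCameraRawFormatter(FitsRawFormatterBase):
    wcsFlipX = True
    translatorClass = ZTFTranslator
    filterDefinitions = ZTF_FILTER_DEFINTIONS

    def getDetector(self, id):
        return ZTFCamera().getCamera()[id]

    def readMetadata(self):
        fitsData = lsst.afw.fits.Fits(self.fileDescriptor.location.path, 'r')
        # Read only extension 1, the single-amplifier image HDU.
        fitsData.setHdu(1)
        metadata = fitsData.readMetadata()
        fix_header(metadata)
        return metadata

    def readImage(self):
        return lsst.afw.image.ImageF(self.fileDescriptor.location.path, 1)
```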

When I then use `pipetask run` to process the successfully ingested data, I get a lot of warnings like:

```
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'altaz_begin' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: 'DATE-OBS not found'
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'observation_type' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: 'IMGTYPE not found'
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'dark_time' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: "Could not find ['EXPTIME'] in header"
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'datetime_end' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: "Could not find ['EXPTIME'] in header"
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'detector_exposure_id' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: 'FILENAME not found'
```

But if the data can be ingested, why do these warnings happen?

Everything runs normally only if I change the HDU to 0:

```python
def readMetadata(self):
    fitsData = lsst.afw.fits.Fits(self.fileDescriptor.location.path, 'r')
    fitsData.setHdu(0)
    metadata = fitsData.readMetadata()
    fix_header(metadata)
    return metadata
```

And the result is:

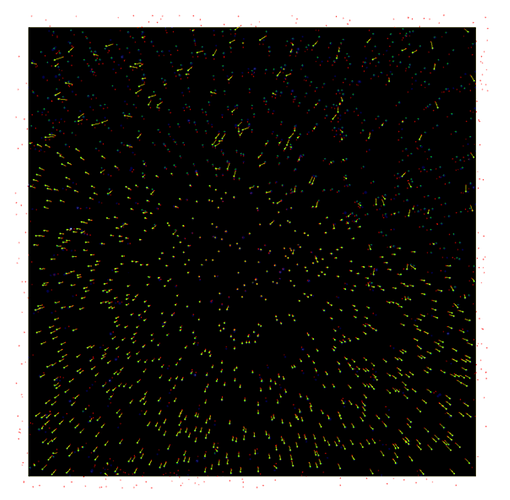

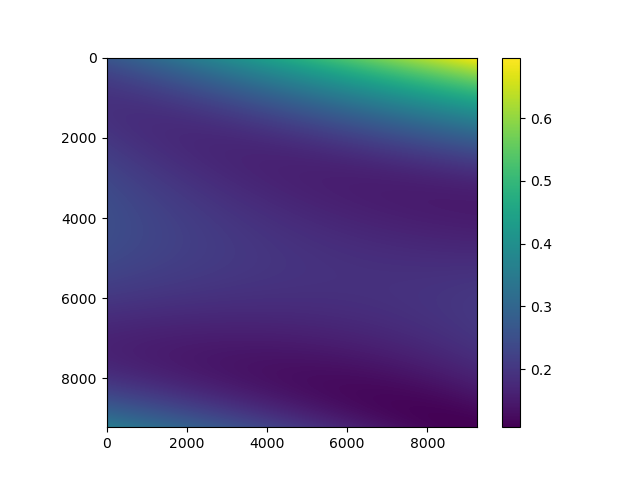

```
characterizeImage.detection INFO: Detected 6441 positive peaks in 2287 footprints and 0 negative peaks in 0 footprints to 100 sigma
characterizeImage.detection INFO: Resubtracting the background after object detection
characterizeImage.measurement INFO: Measuring 2287 sources (2287 parents, 0 children)
characterizeImage.measurePsf INFO: Measuring PSF
characterizeImage.measurePsf INFO: PSF star selector found 3 candidates
characterizeImage.measurePsf.reserve INFO: Reserved 0/3 sources
characterizeImage.measurePsf INFO: Sending 3 candidates to PSF determiner
characterizeImage.measurePsf.psfDeterminer WARNING: NOT scaling kernelSize by stellar quadrupole moment, but using absolute value
> WARNING: 1st context group-degree lowered (not enough samples)
> WARNING: 1st context group removed (not enough samples)
characterizeImage.measurePsf INFO: PSF determination using 3/3 stars.
py.warnings WARNING: /home/yu/lsst_stack/23.0.1/stack/miniconda3-py38_4.9.2-0.8.1/Linux64/pipe_tasks/gf1799c5b72+6048f86b6d/python/lsst/pipe/tasks/characterizeImage.py:495: FutureWarning: Default position argument overload is deprecated and will be removed in version 24.0. Please explicitly specify a position.
  psfSigma = psf.computeShape().getDeterminantRadius()
ctrl.mpexec.singleQuantumExecutor ERROR: Execution of task 'characterizeImage' on quantum {instrument: 'ZTF', detector: 43, visit: 20210804, ...} failed. Exception InvalidParameterError:
  File "src/PsfexPsf.cc", line 233, in virtual std::shared_ptr<lsst::afw::image::Image<double> > lsst::meas::extensions::psfex::PsfexPsf::_doComputeImage(const Point2D&, const lsst::afw::image::Color&, const Point2D&) const
    Only spatial variation (ndim == 2) is supported; saw 0 {0}
lsst::pex::exceptions::InvalidParameterError: 'Only spatial variation (ndim == 2) is supported; saw 0'
```

At least it begins to work now. I will read the task's config class to figure out the error above.

My question is: why do these warnings happen?

```
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'dark_time' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132/raw_ZTF_ZTF_r_ztf_20210804364132_43_ZTF_raw_all.fits: "Could not find ['EXPTIME'] in header"
astro_metadata_translator.observationInfo WARNING: Ignoring Error calculating property 'datetime_end' using translator <class 'astro_metadata_translator.translators.ztf.ZTFTranslator'> and file /home/yu/wfst_data/ztf_try/ZTF/raw/all/raw/20210804/ztf_20210804364132
```

For ZTF, `EXPTIME` does indeed exist only in extension 0, not in extension 1.
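Since the keywords the translator needs are split between the two ZTF HDUs, one workaround I could try is to hand the translator a single header that combines both: the primary-header keywords first, with the extension's keywords overriding them. The sketch below only illustrates that merge with plain Python dicts. The helper names `merge_headers` and `is_structural` and the list of structural keywords are my own assumptions, not part of obs_base or astro_metadata_translator. In a real `readMetadata` the two headers would come from HDU 0 and HDU 1 (for example via `lsst.afw.fits.readMetadata(path, 0)` and `lsst.afw.fits.readMetadata(path, 1)`, as in the DECam code) before calling `fix_header`; converting to and from `PropertyList` is left out here.

```python
"""Combine a primary FITS header with one extension header.

Assumption: this imitates the FITS INHERIT convention, i.e. keywords from
the primary HDU (extension 0) are visible from an extension unless the
extension defines the same keyword itself. Headers are modelled as plain
dicts here; in a formatter they would be the metadata read from HDU 0 and
HDU 1.
"""

from typing import Any, Dict, Mapping

# Keywords that describe the layout of one specific HDU; copying them from
# the primary header would describe the wrong data unit.
STRUCTURAL_KEYS = {"SIMPLE", "XTENSION", "BITPIX", "EXTEND",
                   "PCOUNT", "GCOUNT", "EXTNAME", "INHERIT"}


def is_structural(key: str) -> bool:
    """Return True for keywords that must not be inherited (including NAXISn)."""
    return key in STRUCTURAL_KEYS or key.startswith("NAXIS")


def merge_headers(primary: Mapping[str, Any],
                  extension: Mapping[str, Any]) -> Dict[str, Any]:
    """Return the primary keywords, overridden by the extension keywords."""
    merged = {key: value for key, value in primary.items() if not is_structural(key)}
    # Values defined in the extension take precedence over the primary ones.
    merged.update(extension)
    return merged


if __name__ == "__main__":
    # Hypothetical headers: EXPTIME only in HDU 0, as in my ZTF files.
    primary = {"SIMPLE": True, "NAXIS": 0, "EXTEND": True, "EXPTIME": 30.0}
    extension = {"XTENSION": "IMAGE", "NAXIS": 2, "BITPIX": -32}
    print(merge_headers(primary, extension))
```

Reading only HDU 0 (the variant that currently runs) drops any keywords that exist only in HDU 1, whereas a merge like this keeps both sets.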

But why does the DECam formatter read only the extension related to the CCD without producing warnings like the ZTF ones? I didn't find anywhere that it reads extension 0, yet a DECam raw file has extension 0 as the primary header plus tens of image extensions, and many of the header keys used by `DecamTranslator` exist only in extension 0. For example:

```
DECam_raw[0].header['EXPTIME']
30

DECam_raw[1].header['EXPTIME']
Input In [75], in <cell line: 1>()
----> 1 DECam_raw[1].header['EXPTIME']
KeyError: "Keyword 'EXPTIME' not found."
```
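To compare the two instruments systematically rather than one keyword at a time, a small helper like the one below could list, for each HDU, which of the keywords from the warnings are present and whether the header sets `INHERIT`. The names `keyword_report` and `inherits` are my own. The `INHERIT` check rests on an assumption I have not verified for stack version 23.0.1: that afw's `readMetadata` merges the primary header into an extension header when the extension contains `INHERIT = T`. If the DECam image extensions carry that keyword and the ZTF extension does not, that could explain why DECam needs no explicit read of extension 0.

```python
"""Report, per HDU, which translator keywords are present.

Headers are passed in as mappings (plain dicts in the demo below). The
keyword list is taken from the ZTFTranslator warnings; it is not the
complete set of keywords any translator uses.
"""

from typing import Any, Dict, List, Mapping, Sequence

# Keywords that the ZTFTranslator warnings reported as missing.
TRANSLATOR_KEYS = ("DATE-OBS", "IMGTYPE", "EXPTIME", "FILENAME")


def inherits(header: Mapping[str, Any]) -> bool:
    """Return True if the header sets INHERIT = T (as a bool or the string 'T')."""
    value = header.get("INHERIT", False)
    return value is True or value == "T"


def keyword_report(headers: Sequence[Mapping[str, Any]],
                   keys: Sequence[str] = TRANSLATOR_KEYS) -> List[Dict[str, Any]]:
    """Summarize keyword availability for each HDU header, in HDU order."""
    report = []
    for index, header in enumerate(headers):
        report.append({
            "hdu": index,
            "present": [key for key in keys if key in header],
            "missing": [key for key in keys if key not in header],
            "inherit": inherits(header),
        })
    return report


if __name__ == "__main__":
    # Hypothetical two-HDU layout mirroring the DECam session above:
    # EXPTIME lives only in the primary header.
    demo = [
        {"SIMPLE": True, "EXPTIME": 30},
        {"XTENSION": "IMAGE", "INHERIT": True},
    ]
    for row in keyword_report(demo):
        print(row)
```

With astropy, as in the session above, the headers could be collected with `[hdu.header for hdu in DECam_raw]`, since astropy `Header` objects support both `in` and `.get()`.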

This may be related to something about how the raw formatter works that I don't understand yet. It looks like it would be more convenient to structure the FITS files like HSC's (one extension per FITS file).

Thank you!