Hi everyone,

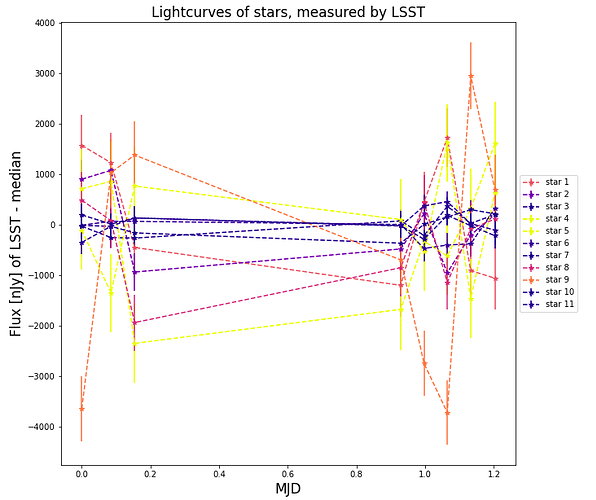

I’m currently analyzing the light curves I obtained by performing image differencing on DECam images. As a check, I want to make sure that non-variable stars show a flat light curve across all epochs, but so far that doesn’t seem to be the case.

The stars I plot come from a crossmatch between the src and diasrc catalogs of a given exposure. I select those with 'src_calib_photometry_used' == True and a null 'ip_diffim_forced_PsfFlux_instFlux'. Because each image/observation uses a slightly different set of stars for calibration, I only keep the stars that are used in all epochs (and that satisfy the cuts above).
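A minimal sketch of this selection with pandas, assuming the crossmatched rows for all epochs are stacked in one long DataFrame (one row per star per visit); `star_id` and `visit` are hypothetical column names, not the catalog schema:

```python
import pandas as pd


def stable_calibration_stars(matched: pd.DataFrame,
                             id_col: str = "star_id",
                             visit_col: str = "visit") -> set:
    """Return the IDs of stars that pass the cuts in every epoch.

    `matched` has one row per (star, visit) from the src/diasrc crossmatch.
    `star_id` and `visit` are hypothetical column names; the two flag/flux
    columns are the ones used in the post.
    """
    # Cuts from the post: used for photometric calibration, and no
    # forced PSF flux on the difference image.
    passes = (
        matched["src_calib_photometry_used"].eq(True)
        & matched["ip_diffim_forced_PsfFlux_instFlux"].isna()
    )
    cut = matched[passes]
    n_visits = matched[visit_col].nunique()
    # Number of distinct visits in which each star survives the cuts.
    counts = cut.groupby(id_col)[visit_col].nunique()
    return set(counts[counts == n_visits].index.tolist())
```

Counting distinct visits per star (rather than rows) keeps a duplicated crossmatch row from making a star look like it appears in more epochs than it does.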

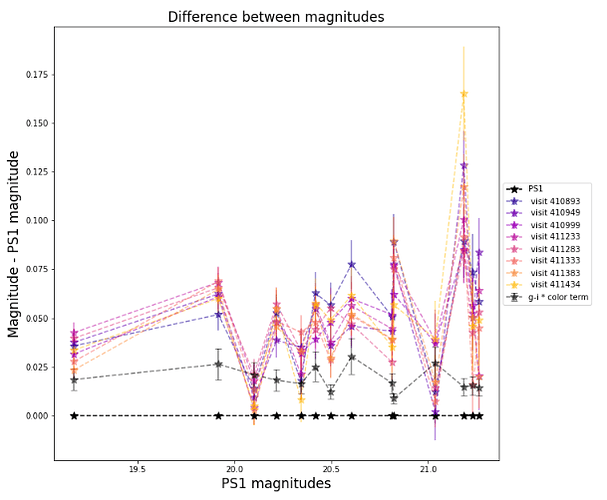

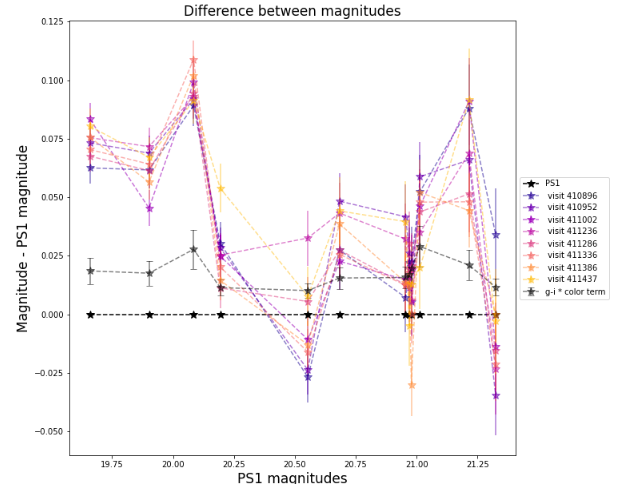

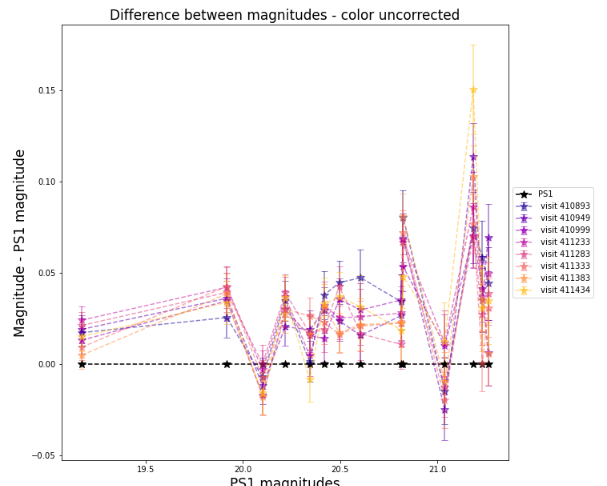

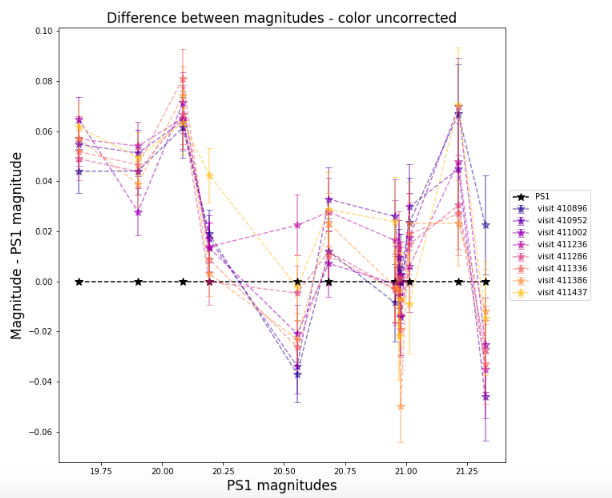

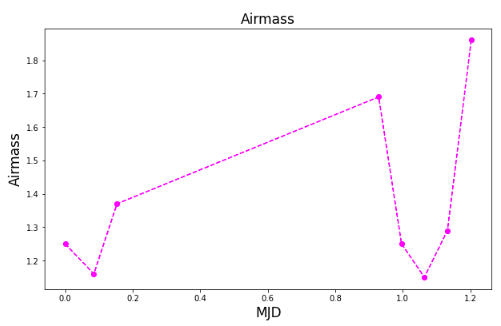

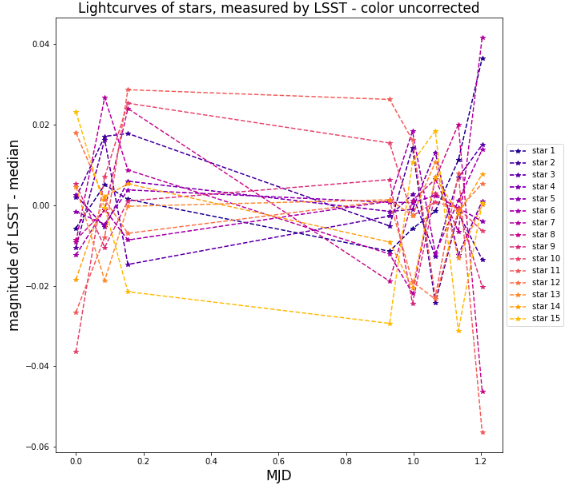

Here are some plots. All of these fluxes and magnitudes are calibrated with the PhotoCalib of the corresponding science image.

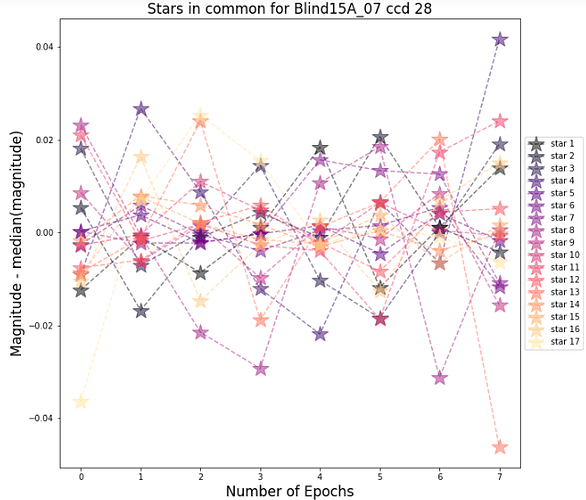

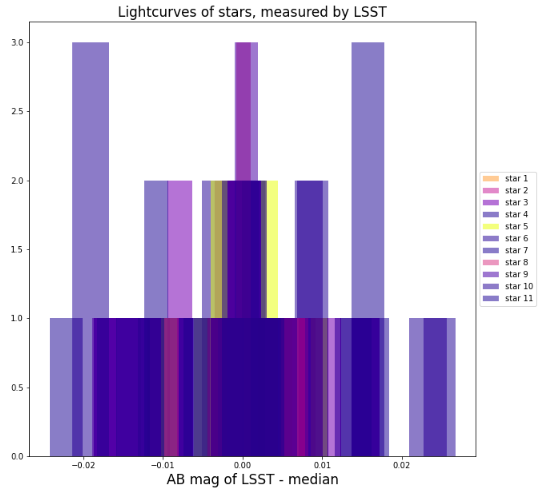

Here are the magnitudes of the stars (base_PsfFlux_mag) minus their median, for every epoch. These magnitudes are obtained by calibrating the src catalog with the .calibrateCatalog method of PhotoCalib.
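For reference, a sketch of the median subtraction and a per-star scatter estimate, assuming the calibrated magnitudes are arranged in an (n_stars, n_epochs) NumPy array with NaN for missing epochs (the array layout and function names are illustrative):

```python
import numpy as np


def median_subtracted(mags: np.ndarray) -> np.ndarray:
    """Subtract each star's median magnitude from its light curve.

    `mags` has shape (n_stars, n_epochs); NaN marks a missing epoch.
    """
    return mags - np.nanmedian(mags, axis=1, keepdims=True)


def robust_scatter(dmag: np.ndarray) -> np.ndarray:
    """Per-star scatter as 1.4826 * MAD (equal to sigma for Gaussian noise),
    less sensitive to a single bad epoch than the standard deviation."""
    center = np.nanmedian(dmag, axis=1, keepdims=True)
    return 1.4826 * np.nanmedian(np.abs(dmag - center), axis=1)
```

Comparing this scatter with each star's typical base_PsfFlux_magErr would show whether the observed dispersion is larger than the photometric errors alone predict.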

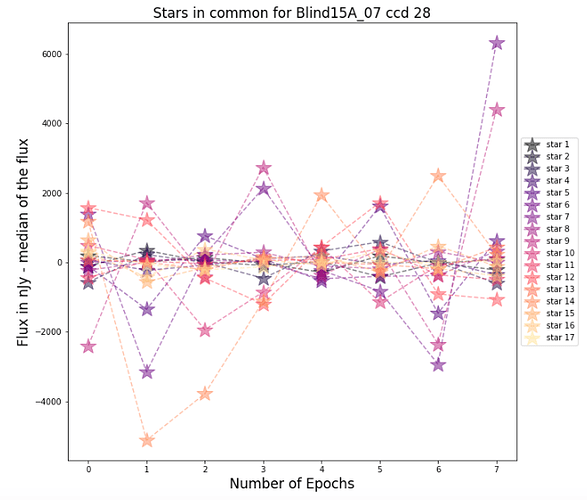

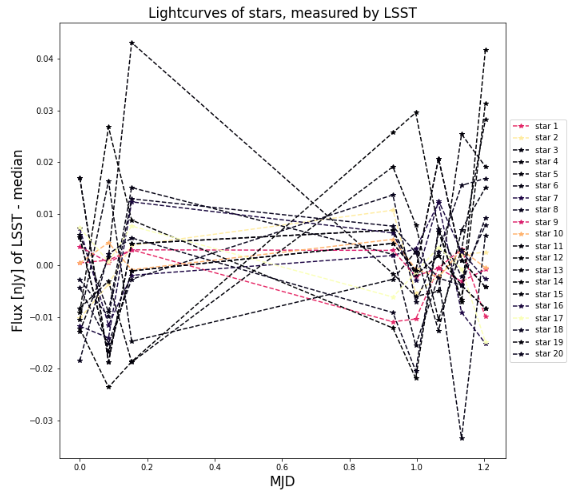

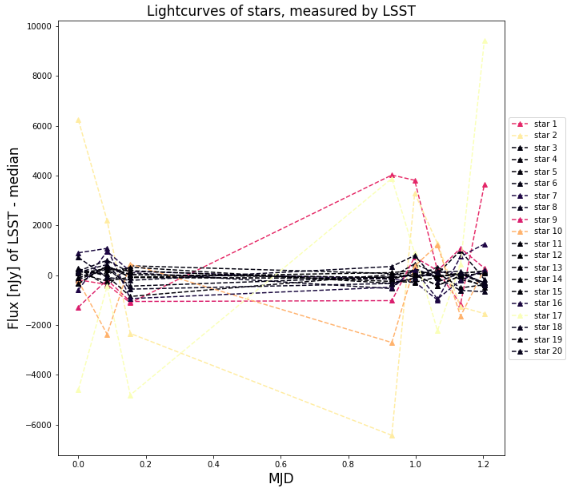

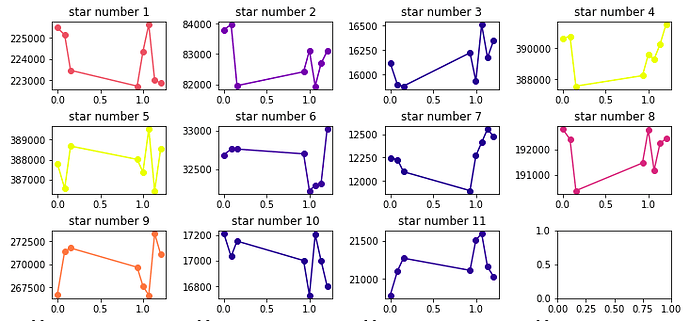

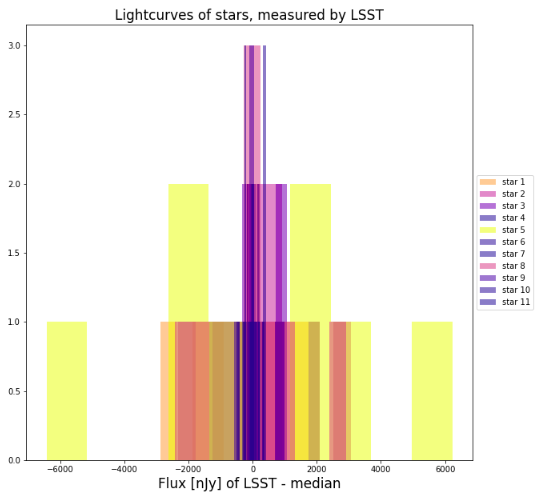

Here are the fluxes of the stars in nJy for every epoch. I computed them by multiplying the src column base_PsfFlux_instFlux by the calibration mean of the corresponding exposure.
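A minimal sketch of that conversion, assuming a single scalar calibration (nJy per instrumental count) per exposure, as given by the calibration mean. If an exposure's PhotoCalib is spatially varying, fluxes computed this way may differ slightly from what .calibrateCatalog returns, which could be worth checking when comparing the two sets of plots. The AB conversion uses the standard 3631 Jy zero point; the helper names are hypothetical:

```python
import numpy as np

# AB zero point: 3631 Jy expressed in nJy.
AB_ZERO_POINT_NJY = 3631.0e9


def inst_to_njy(inst_flux, calibration_mean):
    """Flux in nJy from an instrumental flux (e.g. base_PsfFlux_instFlux)
    using one scalar calibration for the whole exposure. Any spatial
    variation in the full PhotoCalib is ignored here."""
    return np.asarray(inst_flux, dtype=float) * calibration_mean


def njy_to_ab_mag(flux_njy):
    """AB magnitude from a flux in nJy (equivalently 31.4 - 2.5 log10 f)."""
    return -2.5 * np.log10(np.asarray(flux_njy, dtype=float) / AB_ZERO_POINT_NJY)
```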

The magnitude variations of these stars are quite large, with a dispersion of up to 0.04 mag. I would like these curves to be flatter, but I’m not sure how to proceed, or whether it’s possible. Could the problem be that I should look at the set of calibration stars of each exposure individually, instead of this smaller common sample? Either way, I would expect the light curves of non-variable stars to look constant.
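One way to test whether the 0.04 mag scatter is a per-exposure zero-point issue rather than star-by-star noise is to take the median over all stars at each epoch. If the curves move together, those medians carry most of the scatter, and subtracting them (a simple ensemble relative-photometry correction, not something the pipeline does here) should flatten the curves. A sketch, assuming the median-subtracted magnitudes are in an (n_stars, n_epochs) NumPy array; the function names are hypothetical:

```python
import numpy as np


def epoch_offsets(dmag: np.ndarray) -> np.ndarray:
    """Median over stars at each epoch: the part of the scatter shared by
    all stars, e.g. a zero-point error in that exposure's calibration.

    `dmag` has shape (n_stars, n_epochs) and holds median-subtracted magnitudes.
    """
    return np.nanmedian(dmag, axis=0)


def remove_epoch_offsets(dmag: np.ndarray) -> np.ndarray:
    """Residuals after removing the common per-epoch offset."""
    return dmag - epoch_offsets(dmag)[np.newaxis, :]
```

If the residuals are much flatter than the input, the problem points to the per-exposure calibration; if they are not, the scatter is star-dependent (for example, position or color dependent).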

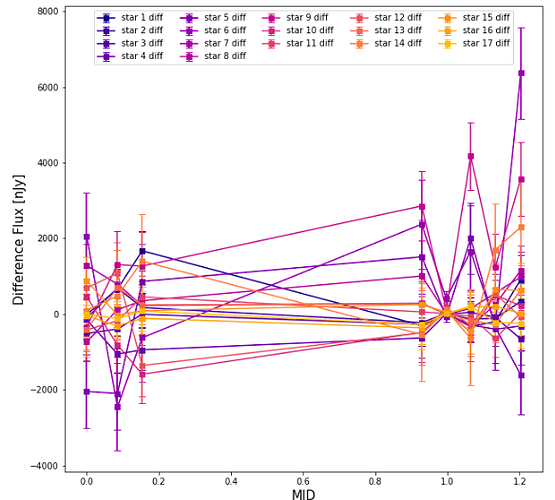

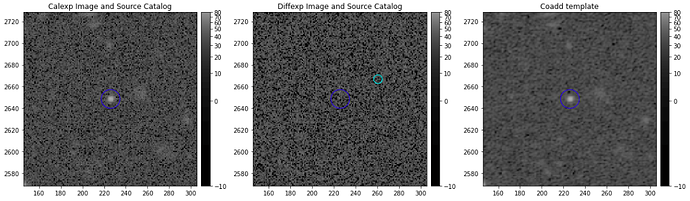

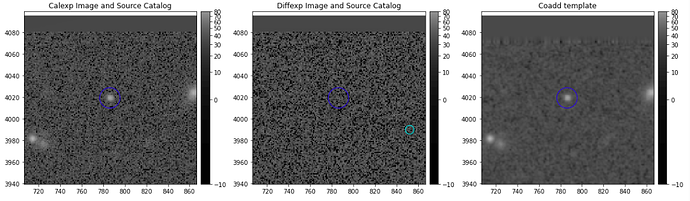

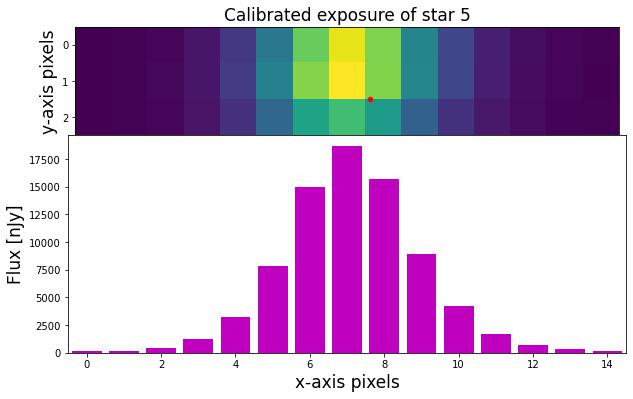

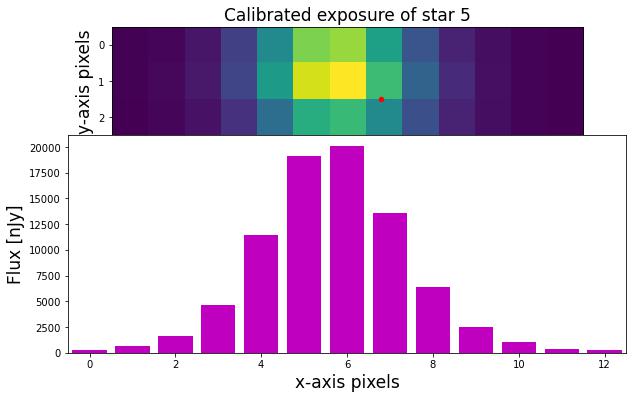

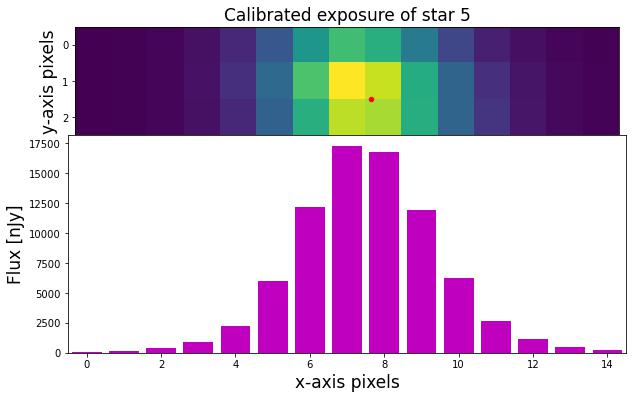

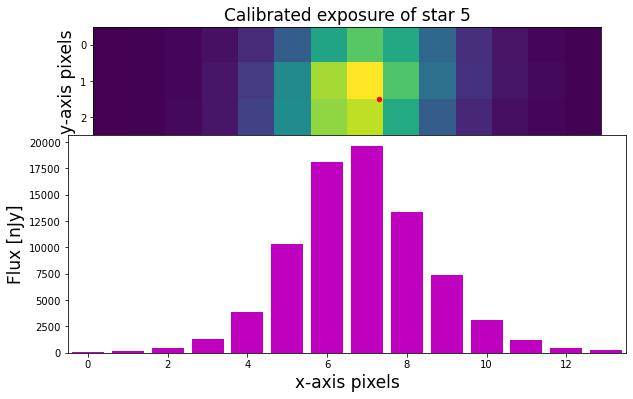

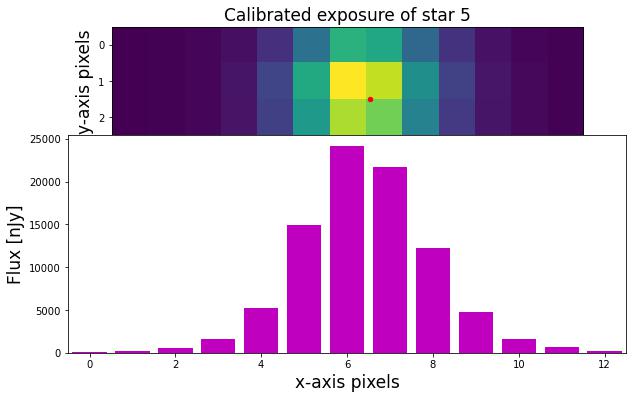

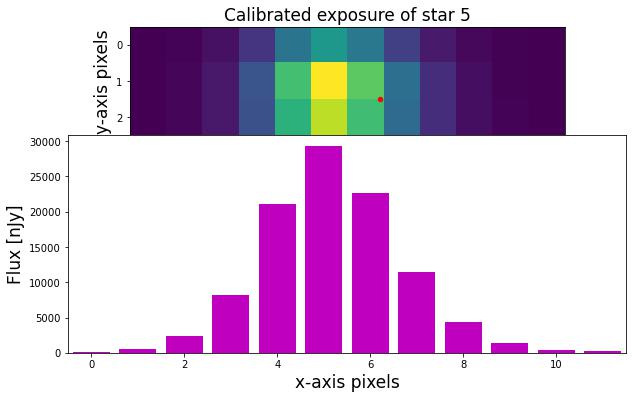

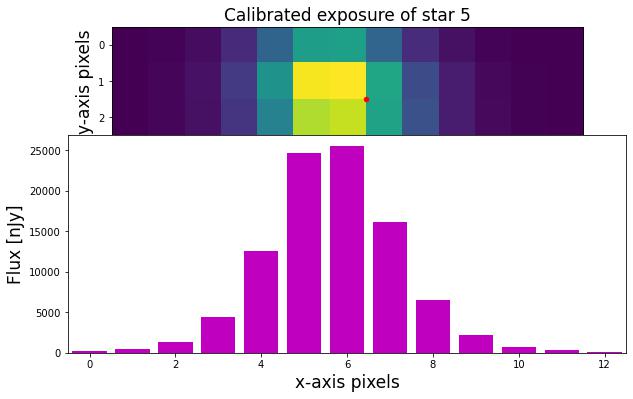

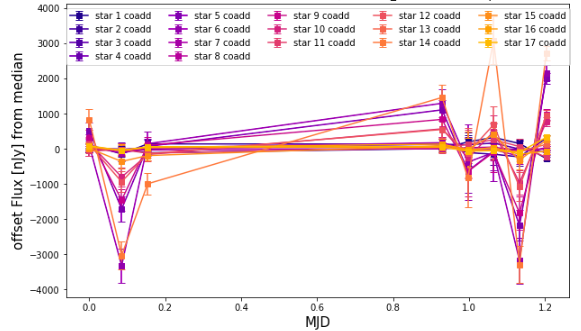

I also measured the flux of the stars on the coadd exposure ('goodSeeingDiff_matchedExp'), which is warped and PSF-matched to the science image. Here are some plots:

The aperture I use is 2.5 times the PSF of the given exposure:

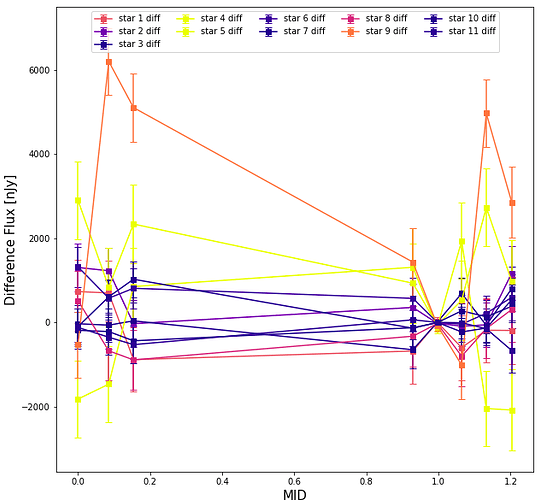
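Since "2.5 times the PSF" could mean 2.5 sigma or 2.5 FWHM (about a factor of 2.35 apart in radius), it helps to state which one is used. A fixed multiple of the PSF size encloses a roughly constant fraction of a star's flux only if the PSF profile shape does not change between exposures; the remaining difference is what an aperture correction accounts for. A simple pixel-center circular aperture in NumPy, with hypothetical helper names (this is not the stack's own aperture-flux measurement):

```python
import numpy as np

# Gaussian PSF: FWHM = 2 * sqrt(2 ln 2) * sigma ~= 2.3548 * sigma.
SIGMA_TO_FWHM = 2.0 * np.sqrt(2.0 * np.log(2.0))


def aperture_radius(psf_size: float, factor: float = 2.5) -> float:
    """Aperture radius as `factor` times a PSF size (sigma or FWHM, in pixels)."""
    return factor * psf_size


def aperture_sum(image: np.ndarray, x0: float, y0: float, radius: float) -> float:
    """Sum of pixels whose centers lie within `radius` of (x0, y0).

    No sub-pixel weighting, no background subtraction, no aperture correction.
    """
    yy, xx = np.indices(image.shape)
    inside = (xx - x0) ** 2 + (yy - y0) ** 2 <= radius ** 2
    return float(image[inside].sum())
```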

And here are the light curves of the stars on the difference image ('goodSeeingDiff_differenceExp').

Again, there is a lot of dispersion and there are clear trends.
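For a non-variable star, the difference-image flux should be consistent with zero within its errors, so a reduced chi-square about zero is a compact way to quantify the dispersion per star. A sketch, assuming the per-epoch difference fluxes and their errors are already in arrays (the function name is hypothetical):

```python
import numpy as np


def reduced_chi2_vs_zero(diff_flux, diff_flux_err) -> float:
    """Reduced chi^2 of difference-image fluxes about zero.

    For a non-variable star with well-estimated errors this should be ~1;
    values well above 1 point to residual flux (e.g. zero-point or
    PSF-matching mismatch) or underestimated errors.
    """
    f = np.asarray(diff_flux, dtype=float)
    e = np.asarray(diff_flux_err, dtype=float)
    good = np.isfinite(f) & np.isfinite(e) & (e > 0)
    n = int(good.sum())
    if n == 0:
        return float("nan")
    # The model is exactly 0 (no free parameters), so dof = n.
    return float(np.sum((f[good] / e[good]) ** 2) / n)
```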

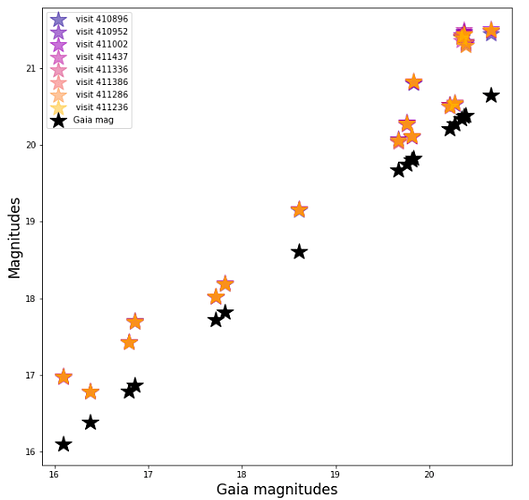

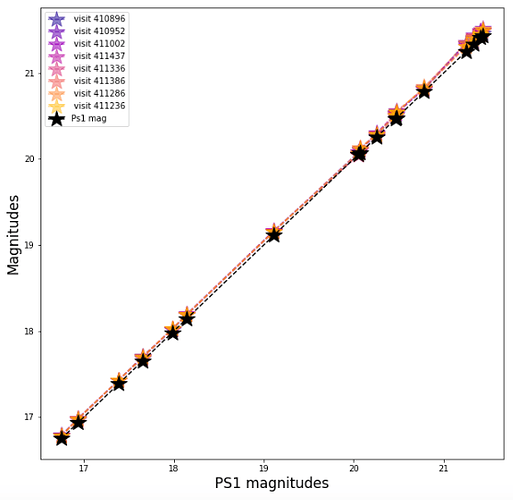

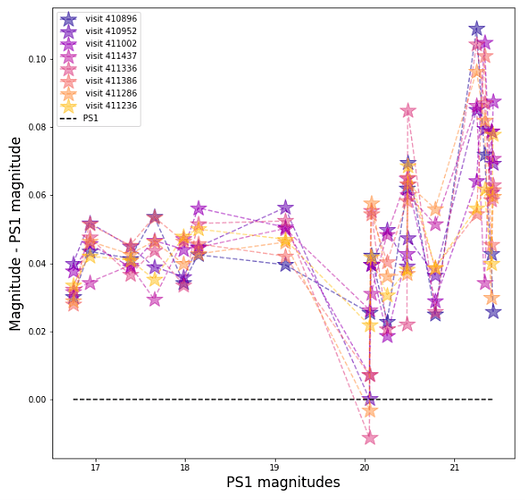

A last test: I compared the Gaia magnitudes with the magnitudes returned by the .calibrateCatalog method on the src catalog.

The black star markers show Gaia magnitudes vs. Gaia magnitudes (i.e. the one-to-one relation), and the other points are the stars found in each exposure. The Gaia magnitudes were retrieved with a VizieR query.
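When comparing to Gaia, note that Gaia G is a much broader band than the DECam filters, so a color-dependent offset (often modeled with a color term in Gaia BP-RP) is expected on top of any zero-point offset. A sketch for summarizing each exposure by its median offset and robust scatter, assuming the crossmatch has already produced matched magnitude arrays (the function name is hypothetical):

```python
import numpy as np


def compare_to_reference(mag, ref_mag):
    """Median offset and robust scatter (1.4826 * MAD) of mag - ref_mag.

    `mag` and `ref_mag` are matched arrays (same star at the same index);
    NaNs are dropped.
    """
    d = np.asarray(mag, dtype=float) - np.asarray(ref_mag, dtype=float)
    d = d[np.isfinite(d)]
    offset = float(np.median(d))
    scatter = float(1.4826 * np.median(np.abs(d - offset)))
    return offset, scatter
```

If the per-exposure offsets vary by a few hundredths of a magnitude, that would be consistent with the common per-epoch shifts seen in the light curves.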

Happy to hear any comments about this!

Thanks!