DART: Detection of Anomalies in Real Time

We’re excited to announce the new AnomalyDetection annotator on Lasair, which provides both an anomaly score and classification results! The annotator uses the classifier-based anomaly detection method presented in Gupta, Muthukrishna, and Lochner, and the transfer learning methods described in Gupta, Muthukrishna, Rehemtulla, and Shah. Our anomaly detection pipeline can identify rare classes including kilonovae, pair-instability supernovae, and intermediate luminosity transients relatively quickly for Zwicky Transient Facility light curves, and will soon be extended to the LSST survey of the Vera C. Rubin Observatory.

Part 1: Classification

Our classifier is a Recurrent Neural Network (RNN) which accepts each light curve as an array of shape N x 4, where N is the number of timesteps. Each row of the input array represents one observation and contains the median passband wavelength, scaled time since first observation, scaled flux, and scaled flux error. This input format lets us handle variable-length time series naturally, without interpolation.
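To make the input format concrete, here is a minimal sketch of how one light curve could be packed into an N x 4 array. The function name `build_input`, the passband wavelengths, and the scaling choices (dividing time by a constant and flux by the peak flux) are assumptions for illustration, not necessarily what the annotator does.

```python
import numpy as np

# Illustrative, approximate passband wavelengths in nanometres (assumed values).
PASSBAND_WAVELENGTH = {"g": 480.0, "r": 640.0}

def build_input(times, fluxes, flux_errors, bands, time_scale=100.0):
    """Pack one light curve into an (N, 4) array, one row per observation.

    Columns: passband wavelength, scaled time since first observation,
    scaled flux, scaled flux error. The scaling used here is an assumption.
    """
    order = np.argsort(times)  # sort observations chronologically
    times = np.asarray(times, dtype=float)[order]
    fluxes = np.asarray(fluxes, dtype=float)[order]
    flux_errors = np.asarray(flux_errors, dtype=float)[order]
    wavelengths = np.array([PASSBAND_WAVELENGTH[b] for b in bands])[order]
    peak = np.max(np.abs(fluxes))  # normalise flux by its peak magnitude
    return np.column_stack([
        wavelengths,
        (times - times[0]) / time_scale,
        fluxes / peak,
        flux_errors / peak,
    ])

x = build_input(
    times=[59000.0, 59003.0, 59001.5],
    fluxes=[10.0, 40.0, 25.0],
    flux_errors=[1.0, 2.0, 1.5],
    bands=["g", "r", "g"],
)
print(x.shape)  # one row per observation, four columns
```

Because every observation is its own row, a light curve with 5 detections and one with 50 use the same format and only differ in N.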

Our classifier is trained to classify three classes (SN Ia, SN II, and SN Ibc). We gathered training data directly from Lasair by crossmatching spectroscopically classified supernovae from TNS (built-in Lasair functionality!).

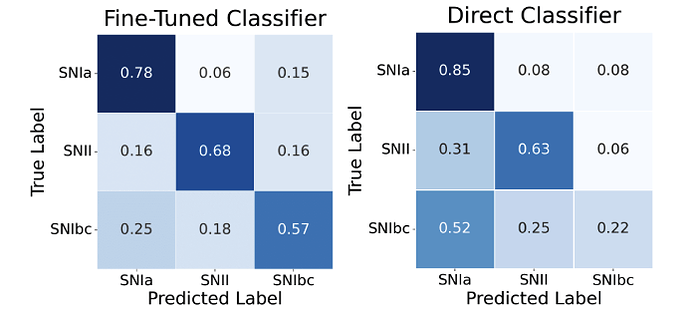

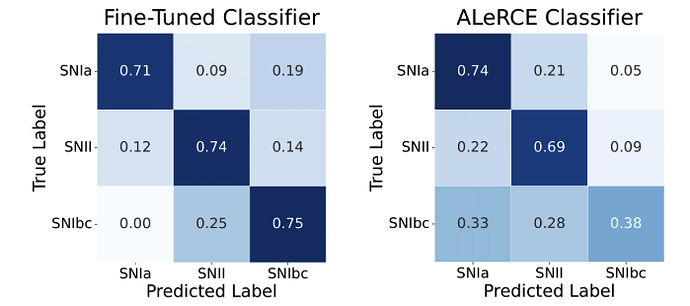

However, before fine-tuning on this dataset, we pretrain our model to classify 13 classes of simulated light curves. Compared to a classifier trained without this pretraining, we see significant improvements, especially on SN Ibc supernovae, for which we have far less data. This is the key finding of our paper on transfer learning.

We also compared our deep learning-based classification approach to previous approaches implemented on Lasair, specifically ALeRCE’s classifier (which uses a simple but effective non-deep learning approach). ALeRCE’s classifier has an additional class (TDEs); however, we ignore its outputs for TDEs in our comparison. In any case, take the following comparison with a grain of salt.

Also note that the Fine-Tuned Classifier figure here is different from the one above as ALeRCE’s classifier has not been run on all objects used to create the figure above.

We see significantly improved performance, especially in the SN Ibc class. Deep learning-based pretraining methods show promise in improving classification performance on real data.

Part 2: Anomaly Detection

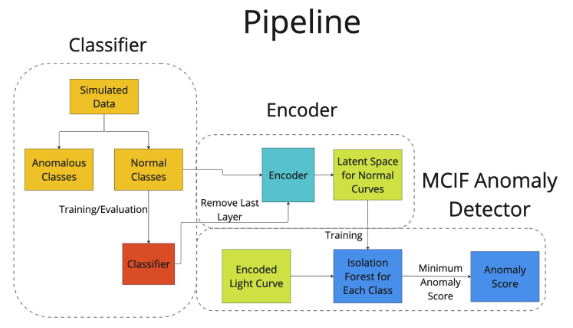

[Figure taken from our paper]

Our technique for anomaly detection is to remove the classification layer of a classifier and repurpose the rest of the model as an encoder. In the latent space produced by the penultimate layer, objects of similar classes should cluster together.
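The idea of reusing a classifier as an encoder can be sketched in a few lines. The toy model below is an assumption for illustration only: it uses a plain Elman-style recurrent cell with random weights in place of the trained RNN, and the names `encode` and `classify` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN, N_CLASSES = 8, 3

# Random weights stand in for a trained model (hypothetical values).
W_x = rng.normal(scale=0.5, size=(4, HIDDEN))         # input row -> hidden
W_h = rng.normal(scale=0.5, size=(HIDDEN, HIDDEN))    # hidden -> hidden
W_out = rng.normal(scale=0.5, size=(HIDDEN, N_CLASSES))  # classification layer

def encode(light_curve):
    """Run the recurrent cell over an (N, 4) array and return the final
    hidden state, i.e. the model with its classification layer removed."""
    h = np.zeros(HIDDEN)
    for row in light_curve:
        h = np.tanh(row @ W_x + h @ W_h)
    return h

def classify(light_curve):
    """Full classifier: the encoder followed by a softmax classification layer."""
    logits = encode(light_curve) @ W_out
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

short_curve = rng.normal(size=(5, 4))
long_curve = rng.normal(size=(40, 4))
latent_short = encode(short_curve)
latent_long = encode(long_curve)
print(latent_short.shape, latent_long.shape)  # same latent size for any N
```

Both functions share the same weights, so the encoder needs no extra training; it simply stops one layer earlier than the classifier.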

Once we have this latent space, we could use an isolation forest to extract anomalies. However, using a single isolation forest tends to focus heavily on SN Ia, resulting in lots of SN II and SN Ibc false positives. Thus, we train a separate isolation forest for each class, and report the minimum anomaly score across all detectors as the final anomaly score. We call this method Multi-Class Isolation Forests (MCIF).
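A minimal sketch of MCIF using scikit-learn's `IsolationForest` is shown below. The helper names `fit_mcif` and `mcif_score`, the synthetic two-dimensional latent space, and the default forest hyperparameters are all assumptions for illustration. Note that scikit-learn's `score_samples` returns lower values for more abnormal points, so the sketch negates it to obtain an anomaly score where higher means more anomalous.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

def fit_mcif(latents, labels, random_state=0):
    """Fit one isolation forest per class on that class's latent vectors."""
    forests = {}
    for cls in np.unique(labels):
        forest = IsolationForest(random_state=random_state)
        forests[cls] = forest.fit(latents[labels == cls])
    return forests

def mcif_score(forests, latents):
    """Final anomaly score: the minimum per-class anomaly score.

    score_samples is negated so that higher means more anomalous.
    """
    per_class = np.stack([-f.score_samples(latents) for f in forests.values()])
    return per_class.min(axis=0)

# Synthetic latent space with three well-separated clusters (made-up data).
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
latents = np.concatenate(
    [c + rng.normal(scale=0.5, size=(200, 2)) for c in centers]
)
labels = np.repeat(["SN Ia", "SN II", "SN Ibc"], 200)

forests = fit_mcif(latents, labels)
queries = np.array([[6.0, 0.0], [20.0, 20.0]])  # inside a cluster, far from all
scores = mcif_score(forests, queries)
print(scores)
```

Taking the minimum means an object is flagged only if it looks unusual to every per-class detector, so a typical SN II is not penalized for sitting far from the SN Ia cluster.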

While MCIF does help, the advantage is smaller on real data, where there are fewer classes. On simulations, performance increases significantly (see Appendix A of Gupta, Muthukrishna, and Lochner).

To evaluate anomaly detection, we pretend that spectroscopically classified TDEs and SLSNe are anomalies. We could build classifiers directly for these classes; however, adding them to our set of “normal” classes would leave no way to evaluate anomaly detection (see Section 3.4 of Gupta, Muthukrishna, and Lochner).

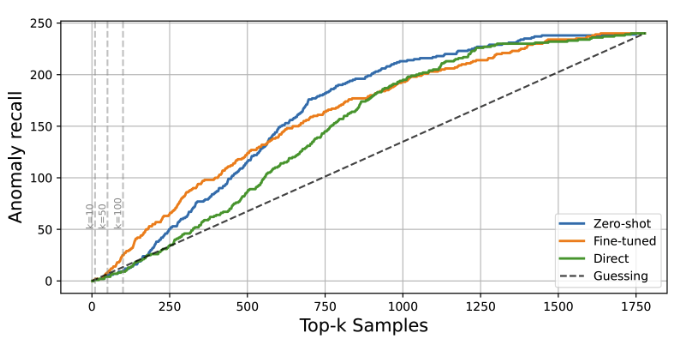

We also realize that most astronomers will likely look at the top-k anomaly scores, so it’s important that those samples have a high purity of anomalies. The following plot shows the anomaly purity of using three different encoders. Direct training and fine-tuning are classifiers trained with the respective method (explained earlier). Zero-shot uses the model pretrained on simulations directly (with no fine-tuning).

As seen, pretraining significantly increases the anomaly purity. We hope that future anomaly detection approaches improve this even further.
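For readers who want to compute a similar metric on their own scores, here is a minimal sketch. It assumes purity means the fraction of true anomalies among the k highest-scoring objects; the function name `top_k_purity` and the example values are made up for illustration.

```python
import numpy as np

def top_k_purity(anomaly_scores, is_anomaly, k):
    """Fraction of true anomalies among the k highest anomaly scores."""
    order = np.argsort(anomaly_scores)[::-1]  # indices, highest score first
    top = np.asarray(is_anomaly, dtype=bool)[order[:k]]
    return top.mean()

# Made-up scores and labels for six objects.
scores = [0.9, 0.2, 0.8, 0.4, 0.7, 0.1]
truth = [True, False, False, False, True, False]
purity = top_k_purity(scores, truth, k=3)
print(purity)  # two of the top three objects are true anomalies
```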

We hope people find both the classifier and the anomaly detector useful; both will start running regularly soon. I’d love to answer questions or talk about this annotator, so feel free to email me at rithwikca2020@gmail.com. I’m looking forward to seeing this annotator used!

Thanks to the Lasair Team, especially Roy, for helping make a lot of the implementation on Lasair possible.