This may be the wrong place to raise this issue.

I am attempting to use code extracted from a Noirlab notebook to build a new notebook that would access astrometry data for processing.

The Noirlab code uses Data Lab (dl) methods and I attempted to pip install dl. I get a message “Defaulting to user installation because normal site-packages is not writeable” that prevents the install.

Should I be putting this issue into GitHub?

That message is telling you it is trying to install it locally. It’s not telling you it failed.

Thanks for the quick response. After the pip install, I tried to import the methods associated with dl, but the response was:

"---> 15 from dl import authClient as ac, queryClient as qc

17 # Get helpers for various convenience function

18 from dl.helpers.utils import convert

ModuleNotFoundError: No module named 'dl'"

It was this message that caused me to believe that the install had failed. I also looked for a dl folder in the python site-packages folder and couldn’t find anything.

What did the pip install say after the warning?

To be clear, are you using pip install --user?

Also, are you sure there is a PyPI package called dl that is the one you want? Have you tried it from, e.g., your own laptop, and does it work there? I may be blind, but dl on PyPI seems to be this: "mrdanbrooks/dl: dl - rm with forgivness" on GitHub, which is not what you are looking for.

Have a look at the Imports and Setup section of this notebook: notebooks-latest/01_GettingStartedWithDataLab/02_GettingStartedWithDataLab.ipynb at master · astro-datalab/notebooks-latest · GitHub

There is no install in this code, but the import from dl is there and it must work in the Noirlab environment.

Modern pip (very recent, it seems) is clever enough to not need --user. The warning message in the initial report was pip saying that it couldn’t install into the read-only location, so it was falling back to a user install. That isn’t the error. What I don’t know is what pip said after that warning.

(And yes, pip install dl will try to install the dl package from PyPI.)

I confirmed that the code works fine if I sign into the Noirlab notebook environment. My objective is to bring access to GAIA data into the Rubin notebook environment. Maybe I am barking up the wrong tree. If you wanted to do this, i.e. have GAIA and Rubin data available in the same working environment, do you have any suggestions?

I think that you wanted to know this:

ModuleNotFoundError Traceback (most recent call last)

Cell In[1], line 15

10 get_ipython().run_line_magic('matplotlib', 'inline')

12 # Data Lab and related imports

13

14 # You’ll need at least these for authenticating and for issuing database queries

---> 15 from dl import authClient as ac, queryClient as qc

17 # Get helpers for various convenience function

18 from dl.helpers.utils import convert

ModuleNotFoundError: No module named 'dl'

Or, was it this:

Defaulting to user installation because normal site-packages is not writeable

Requirement already satisfied: dl in /home/sdcorle1/.local/lib/python3.11/site-packages (0.1.0)

Collecting argparse>=1.0 (from dl)

Using cached argparse-1.4.0-py2.py3-none-any.whl.metadata (2.8 kB)

Using cached argparse-1.4.0-py2.py3-none-any.whl (23 kB)

DEPRECATION: nb-black 1.0.7 has a non-standard dependency specifier black>='19.3'; python_version >= "3.6". pip 24.0 will enforce this behaviour change. A possible replacement is to upgrade to a newer version of nb-black or contact the author to suggest that they release a version with a conforming dependency specifiers. Discussion can be found at https://github.com/pypa/pip/issues/12063

Installing collected packages: argparse

Successfully installed argparse-1.4.0

Note: you may need to restart the kernel to use updated packages.

Sorry, I was out of sync on the messaging with you. The pip install noaodatalab did the trick. Thanks very much for your patience. Problem solved.
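For anyone who lands here with the same ModuleNotFoundError, here is a minimal sketch for checking from inside the notebook whether a module such as dl is importable, and where it would be loaded from. It uses only the standard library; the helper name module_location is made up for illustration.

```python
import importlib.util


def module_location(name):
    """Return where a top-level module would be imported from, or None if absent.

    `module_location` is a hypothetical helper name, not part of any library.
    """
    spec = importlib.util.find_spec(name)
    if spec is None:
        return None
    if spec.origin is not None:
        return spec.origin
    # Namespace packages have no single origin file; report their search paths.
    return ", ".join(spec.submodule_search_locations or [])


# Prints a file path if the module is importable, otherwise None.
print("dl ->", module_location("dl"))
```

If this prints None right after a pip install that reported success, it may be worth restarting the kernel (as pip's own note suggests) and checking that the installed package actually provides a module with that name. In this thread, the dl package on PyPI was not the Data Lab client.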

In the operations era, we will have a then-current Gaia data release directly available in the RSP on our own database and TAP servers, so that fast cross-queries can be done. For now…

I’m not sure whether DataLab offers value-added services for the Gaia data, but what I generally do is just use PyVO to do queries against the ESA Gaia TAP server, https://gea.esac.esa.int/tap-server/tap .

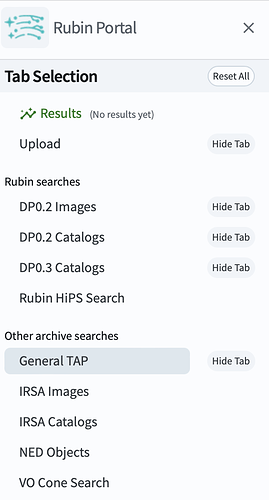
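A minimal sketch of that kind of PyVO query against the ESA Gaia TAP server is given here. The TAP URL is the one quoted above. The table name gaiadr3.gaia_source, the selected columns, the example coordinates, and the helper names build_cone_query and query_gaia are assumptions for illustration. PyVO is imported only inside query_gaia, so the query string can be built and inspected without it.

```python
GAIA_TAP_URL = "https://gea.esac.esa.int/tap-server/tap"


def build_cone_query(ra_deg, dec_deg, radius_deg,
                     table="gaiadr3.gaia_source", limit=10):
    """Build an ADQL cone search; the table and columns are assumptions."""
    return (
        f"SELECT TOP {int(limit)} source_id, ra, dec, parallax, pmra, pmdec "
        f"FROM {table} "
        f"WHERE 1 = CONTAINS(POINT('ICRS', ra, dec), "
        f"CIRCLE('ICRS', {ra_deg:.6f}, {dec_deg:.6f}, {radius_deg:.6f}))"
    )


def query_gaia(adql):
    """Run a synchronous TAP query and return an astropy Table."""
    import pyvo  # imported lazily; needs PyVO installed and network access

    service = pyvo.dal.TAPService(GAIA_TAP_URL)
    return service.search(adql).to_table()


# Example coordinates are placeholders, not taken from the thread.
adql = build_cone_query(60.0, -35.0, 0.01)
print(adql)
# table = query_gaia(adql)  # uncomment to actually contact the ESA server
```

For larger queries, an asynchronous job is probably a better fit than the synchronous search used in this sketch.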

Note also that it’s easy to access Gaia from the RSP Portal Aspect (though you didn’t ask about that):

Select “General TAP” from the sidebar:

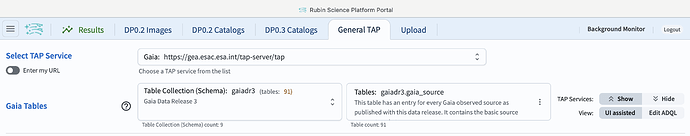

and then select Gaia on the resulting screen:

This will issue queries directly against the ESA TAP service.

@sdcorle1, if you want to explore the ESA Gaia TAP service option using PyVO in your notebook, following Gregory’s suggestion, here is a tutorial you can have a look at. Sec. 4 is particularly relevant.

@gpdf @galaxyumi331 Thanks to both of you for these excellent suggestions. The news about the operational environment is great. From my early explorations, I am using the dl code to access the GAIA data. I’ll take a look to see what the TAP service can provide. TAP sounds like the better long-term solution, especially when the GAIA data is available in the same environment.

I have another question that derives from having GAIA and LSST data in the same TAP environment. While looking around in the GAIA documentation, I discovered that they have created a spreadsheet that cross-references exoplanet candidates to GAIA source IDs. The source IDs then lead to parallax and proper motion parameters. I ran a query using IN to compare the GAIA source table to a small subset of the spreadsheet, with a WHERE condition that looked for RA and DEC within the DC2 boundaries. This query resulted in one candidate exoplanet within the boundaries. (I’m not greedy, so this one is enough for my current purpose.)
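For readers who want to try something similar, a query of that shape could be put together roughly as follows. This is only a sketch, not the exact query I ran: the table name, the column list, the source IDs, and the RA/DEC box are all placeholders, and build_candidate_query is a made-up helper name.

```python
def build_candidate_query(source_ids, ra_range, dec_range,
                          table="gaiadr3.gaia_source"):
    """Build an ADQL query that selects listed source IDs inside an RA/DEC box.

    The table name and the selected columns are assumptions for illustration.
    """
    if not source_ids:
        raise ValueError("source_ids must not be empty")
    id_list = ", ".join(str(int(s)) for s in source_ids)
    ra_min, ra_max = ra_range
    dec_min, dec_max = dec_range
    return (
        "SELECT source_id, ra, dec, parallax, pmra, pmdec "
        f"FROM {table} "
        f"WHERE source_id IN ({id_list}) "
        f"AND ra BETWEEN {ra_min} AND {ra_max} "
        f"AND dec BETWEEN {dec_min} AND {dec_max}"
    )


# Placeholder IDs and a placeholder box; substitute the real spreadsheet
# subset and the actual DC2 footprint limits.
print(build_candidate_query([1, 2, 3], (50.0, 74.0), (-44.0, -27.0)))
```

The resulting string can then be submitted to whichever query service holds the Gaia source table.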

I took the RA and DEC from the candidate exoplanet to the Rubin environment and tried to match against LSST source records. I got four reasonable matches. I know that the LSST DP0.2 data is synthetic. Nonetheless, this brought up my new question.

When there is GAIA data in the operational LSST data environment, are you aware of any techniques that might be used to cross-reference from one data source to the other?
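One generic technique is a positional cross-match: take the RA and DEC from one catalog and keep the rows of the other catalog that fall within a small angular radius. The sketch below shows the idea in plain Python, assuming the second catalog is a list of dicts with "ra" and "dec" keys in degrees. The function names, the sample rows, and the 2 arcsecond radius are made up for illustration, and it ignores proper motion between the epochs of the two catalogs.

```python
import math


def angular_separation_deg(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees, using the haversine formula."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    sin_ddec = math.sin((dec2 - dec1) / 2.0)
    sin_dra = math.sin((ra2 - ra1) / 2.0)
    a = sin_ddec ** 2 + math.cos(dec1) * math.cos(dec2) * sin_dra ** 2
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(a))))


def match_within(target, catalog, radius_arcsec):
    """Return (separation_arcsec, row) pairs within the radius, nearest first."""
    ra0, dec0 = target
    matches = []
    for row in catalog:
        sep = angular_separation_deg(ra0, dec0, row["ra"], row["dec"]) * 3600.0
        if sep <= radius_arcsec:
            matches.append((sep, row))
    matches.sort(key=lambda m: m[0])
    return matches


# Made-up rows standing in for the second catalog.
catalog = [
    {"id": 1, "ra": 60.0, "dec": -35.0},
    {"id": 2, "ra": 60.0, "dec": -35.0 + 1.0 / 3600.0},
    {"id": 3, "ra": 61.0, "dec": -35.0},
]
for sep, row in match_within((60.0, -35.0), catalog, radius_arcsec=2.0):
    print(row["id"], round(sep, 3))
```

For large catalogs a brute-force loop like this will be slow. astropy's SkyCoord.match_to_catalog_sky does nearest-neighbour matching efficiently, and a TAP service that holds both tables could do the join on the server side.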