This is a general flat-fielding question that came up while I was processing HSC data. It may also be relevant to Rubin or other large-format imaging cameras.

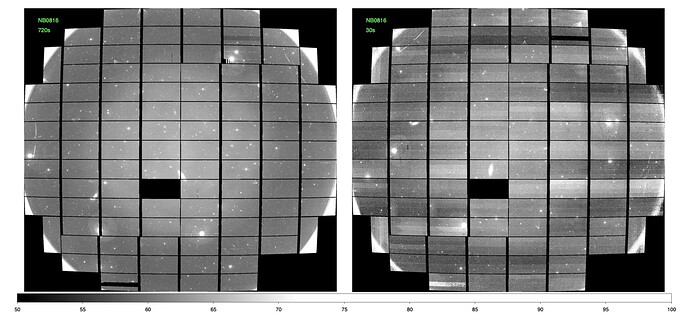

I’m trying to understand why I get different results when flat-fielding long and short exposures. The attached “minimal” example shows two narrow-band (NB0816) exposures, one 720s and the other 30s. Both were bias-subtracted and then divided by the same on-axis dome flat. I have not done any dark subtraction, correction for vignetting, etc.
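For concreteness, here is a minimal sketch of the reduction described above (bias subtraction followed by division by a flat), written with NumPy. The dict-of-CCD-arrays layout and the function name are hypothetical. The one assumption worth flagging is that the flat is normalized by a single mosaic-wide median, so that the relative CCD-to-CCD response recorded in the dome flat is preserved.

```python
import numpy as np

def flat_field_mosaic(raws, biases, flats):
    """Bias-subtract each CCD and divide by the flat, normalized by ONE mosaic-wide median.

    raws, biases, flats: dicts mapping a CCD id to a 2-D numpy array
    (hypothetical layout, for illustration only).
    """
    # A single global normalization keeps the relative CCD-to-CCD response
    # encoded in the dome flat; normalizing each CCD separately would erase it.
    norm = np.median(np.concatenate([f.ravel() for f in flats.values()]))
    return {ccd: (raws[ccd] - biases[ccd]) / (flats[ccd] / norm) for ccd in raws}
```

With this convention, two CCDs that see the same sky but have different responses in the flat should come out at the same level after division.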

In terms of continuity between CCDs, the long exposure looks pretty good. In contrast, the short exposure shows significant discontinuities between CCDs: the jumps between adjacent CCDs reach ~5 counts, against a sky level of ~60 counts (roughly 8%). I also notice that the CCDs whose counts are too high or too low tend to line up in rows.
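One rough way to frame the difference between the two exposures is to ask whether the CCD-to-CCD error is additive (fixed in counts, e.g. a bias residual) or multiplicative (a fixed fraction of the signal, e.g. a flat or gain mismatch). The sketch below predicts the long-exposure jump under each hypothesis. It assumes, without having checked, that the sky level scales linearly with exposure time; the function name is hypothetical.

```python
def predict_long_jump(sky_short, t_short, t_long, jump_short):
    """Predict the CCD-to-CCD jump in a longer exposure under two hypotheses.

    Assumes (unverified) that the sky level scales linearly with exposure time.
    Returns (sky_long, additive_jump, multiplicative_jump), all in counts.
    """
    sky_long = sky_short * t_long / t_short
    # Additive error: a fixed number of counts, independent of exposure time.
    additive_jump = jump_short
    # Multiplicative error: a fixed fraction of the signal, so it grows with the sky.
    multiplicative_jump = jump_short * sky_long / sky_short
    return sky_long, additive_jump, multiplicative_jump

# Numbers from the 30 s and 720 s NB0816 exposures described above.
sky_long, add_jump, mult_jump = predict_long_jump(60.0, 30.0, 720.0, 5.0)
print(sky_long, add_jump, mult_jump)  # 1440.0 5.0 120.0
```

Under these assumptions, a purely multiplicative error would show up as ~120-count jumps in the 720 s frame, while a purely additive one would shrink to ~0.3% of the sky there. This is only a consistency check, not a diagnosis.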

Does anyone know what might be causing this? It doesn't seem to be obviously caused by bias residuals or dark current. Nonlinearity, perhaps?

Thanks in advance for whatever insights you may have!