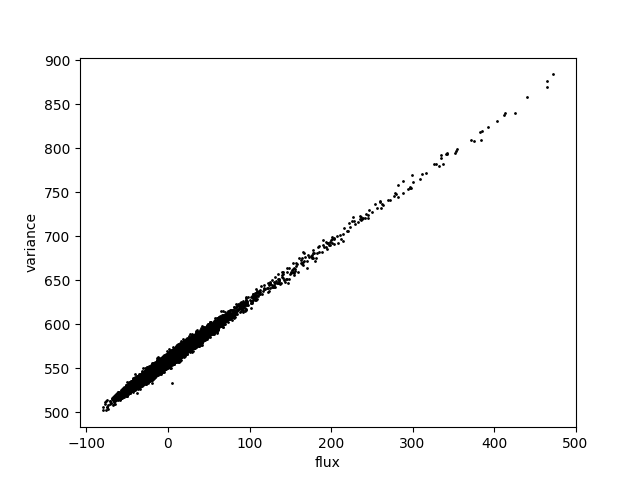

I am doing source injection on visit images in DP1 and I noticed a strange behaviour in the variance maps. Here I have a small cutout (128x128 pixels) before injection and I plot the variance vs the flux:

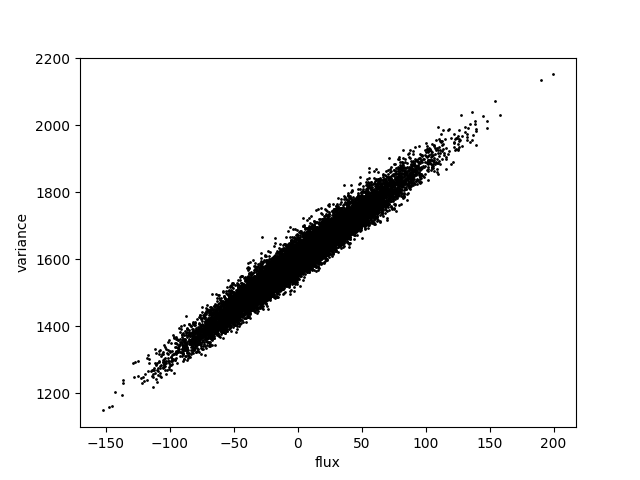

This looks like what I expect: basically a linear relation, with maybe some calibration/gain differences. However, once I run the source injection pipeline task, the same plot looks like this:

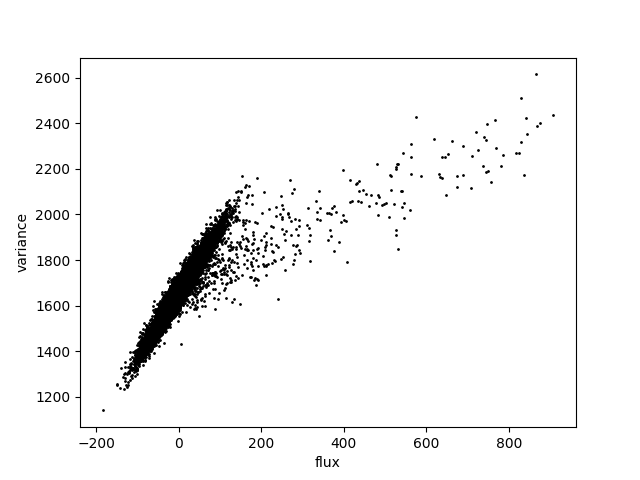

It’s not really following the trend anymore. Obviously I have injected a pretty bright object in the cutout, but still I would have expected it to follow the same trend.
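For anyone who wants to reproduce the comparison, here is a minimal sketch of the check I have in mind: fit a straight line to variance vs flux on the pre-injection pixels, then look at how far the post-injection pixels fall from that line. It works on plain NumPy arrays and uses synthetic data as a stand-in. The function names are my own for illustration, and reading the arrays from `exposure.image.array` and `exposure.variance.array` is an assumption about how you would get them from a visit image.

```python
import numpy as np


def fit_variance_trend(flux, variance):
    """Least-squares fit of variance = slope * flux + intercept.

    Non-finite pixels are ignored. Returns (slope, intercept).
    """
    flux = np.asarray(flux, dtype=float).ravel()
    variance = np.asarray(variance, dtype=float).ravel()
    good = np.isfinite(flux) & np.isfinite(variance)
    slope, intercept = np.polyfit(flux[good], variance[good], 1)
    return slope, intercept


def trend_residuals(flux, variance, slope, intercept):
    """Per-pixel difference between the variance and the fitted linear trend."""
    flux = np.asarray(flux, dtype=float)
    variance = np.asarray(variance, dtype=float)
    return variance - (slope * flux + intercept)


# Synthetic stand-in for a 128x128 cutout (NOT real DP1 data).
# With a real exposure the arrays would presumably come from
# exposure.image.array and exposure.variance.array (assumption).
rng = np.random.default_rng(0)
flux_before = rng.uniform(0.0, 1000.0, size=(128, 128))
var_before = 50.0 + 0.7 * flux_before  # exactly linear by construction

slope, intercept = fit_variance_trend(flux_before, var_before)
residuals = trend_residuals(flux_before, var_before, slope, intercept)
```

Running `trend_residuals` on the post-injection arrays with the pre-injection `slope` and `intercept` shows which pixels depart from the original trend, and by how much.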

In case it’s relevant:

- This is a u-band image. I haven’t checked other bands, but I can.

- I injected a point source, just using the default PSF model for the visit image, at the appropriate position etc.

- The objectID where I’m doing the injecting is: 592914119179383718

- I’m using the injection task: `from lsst.source.injection import VisitInjectConfig, VisitInjectTask`

- The original and injected images aren’t perfectly aligned; they are offset by 2 arcsec, since the injected one is centered on my injected point source. Still, my injected point source is by far the brightest object in the cutout, so the high flux values are definitely injected ones.