Admittedly, I’m using an old pipeline version (v19_0_0), but I still thought this topic and the associated “edge case” DECam examples might be worth bringing up irrespective of the exact pipeline version.

I have been running some test samples of hundreds of random DECaLS exposures through processCcd, to obtain detrended images and photometric/astrometric calibrations.

Typically, I find that the per-CCD run time is ~30 seconds and peak memory usage is ~4 GB. However, I noticed a small number of CCDs (a few out of ~15k) for which the run time is much longer (~10-20+ minutes per CCD) and the memory usage is much higher (up to 23+ GB per CCD).

The first two such examples I looked at were:

EXPNUM = 430396, CCDNUM = 59 (N28), FILTER = z

EXPNUM = 521050, CCDNUM = 37 (N6), FILTER = z

Both of these cases have a bright (V ~ 4 mag) star right near the corner of the CCD. The logs show that the “time sink” is the deblending stage, which took ~23 minutes for the first CCD and ~7 minutes for the second:

INFO 2022-06-20T04:53:31.024-0700 processCcd.calibrate.deblend ({'date': '2015-04-08', 'filter': 'z', 'visit': 430396, 'hdu': 59, 'ccdnum': 59, 'object': 'DECaLS_13111_z'})(deblend.py:270)- Deblending 1271 sources

INFO 2022-06-20T05:16:36.196-0700 processCcd.calibrate.deblend ({'date': '2015-04-08', 'filter': 'z', 'visit': 430396, 'hdu': 59, 'ccdnum': 59, 'object': 'DECaLS_13111_z'})(deblend.py:417)- Deblended: of 1271 sources, 149 were deblended, creating 7910 children, total 9181 sources

INFO 2022-06-20T09:48:48.697-0700 processCcd.calibrate.deblend ({'date': '2016-02-28', 'filter': 'z', 'visit': 521050, 'hdu': 39, 'ccdnum': 37, 'object': 'DECaLS_31914_z'})(deblend.py:270)- Deblending 375 sources

INFO 2022-06-20T09:56:03.031-0700 processCcd.calibrate.deblend ({'date': '2016-02-28', 'filter': 'z', 'visit': 521050, 'hdu': 39, 'ccdnum': 37, 'object': 'DECaLS_31914_z'})(deblend.py:417)- Deblended: of 375 sources, 21 were deblended, creating 3982 children, total 4357 sources
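In case it helps anyone scan their own logs for similar cases, here is a minimal sketch of how the deblend wall time and the number of children per deblended parent can be pulled out of log lines like the ones above. The helper name `summarize` and the regular expressions are my own, written against the message format shown here (which may differ in other pipeline versions), and the dataId dicts are abbreviated to the `visit` and `ccdnum` keys for readability.

```python
import re
from datetime import datetime

# The four log lines quoted above, with the dataId dict abbreviated.
LOG_LINES = [
    "INFO 2022-06-20T04:53:31.024-0700 processCcd.calibrate.deblend ({'visit': 430396, 'ccdnum': 59})(deblend.py:270)- Deblending 1271 sources",
    "INFO 2022-06-20T05:16:36.196-0700 processCcd.calibrate.deblend ({'visit': 430396, 'ccdnum': 59})(deblend.py:417)- Deblended: of 1271 sources, 149 were deblended, creating 7910 children, total 9181 sources",
    "INFO 2022-06-20T09:48:48.697-0700 processCcd.calibrate.deblend ({'visit': 521050, 'ccdnum': 37})(deblend.py:270)- Deblending 375 sources",
    "INFO 2022-06-20T09:56:03.031-0700 processCcd.calibrate.deblend ({'visit': 521050, 'ccdnum': 37})(deblend.py:417)- Deblended: of 375 sources, 21 were deblended, creating 3982 children, total 4357 sources",
]

TIMESTAMP_RE = re.compile(r"(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d+[+-]\d{4})")
VISIT_RE = re.compile(r"'visit': (\d+)")
START_RE = re.compile(r"Deblending (\d+) sources")
END_RE = re.compile(
    r"of (\d+) sources, (\d+) were deblended, creating (\d+) children"
)


def summarize(lines):
    """Return {visit: stats} for each start/end pair of deblend messages."""
    starts = {}
    summary = {}
    for line in lines:
        stamp = TIMESTAMP_RE.search(line)
        visit = VISIT_RE.search(line)
        if stamp is None or visit is None:
            continue  # not a line in the expected format
        when = datetime.strptime(stamp.group(1), "%Y-%m-%dT%H:%M:%S.%f%z")
        key = int(visit.group(1))
        if START_RE.search(line):
            starts[key] = when
            continue
        end = END_RE.search(line)
        if end and key in starts:
            parents, deblended, children = (int(g) for g in end.groups())
            summary[key] = {
                "seconds": (when - starts[key]).total_seconds(),
                "parents": parents,
                "deblended": deblended,
                "children": children,
                "children_per_parent": children / deblended,
            }
    return summary


for visit, stats in summarize(LOG_LINES).items():
    print(
        f"visit {visit}: {stats['seconds'] / 60:.1f} min in deblend, "
        f"{stats['children_per_parent']:.0f} children per deblended parent"
    )
```

Keying on `visit` alone is enough for these two examples; for a full run with many CCDs per exposure, the key would need to include `ccdnum` as well.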

Here’s what the (EXPNUM = 430396, CCDNUM = 59) “src” catalog looks like, with each red dot being one row in that catalog:

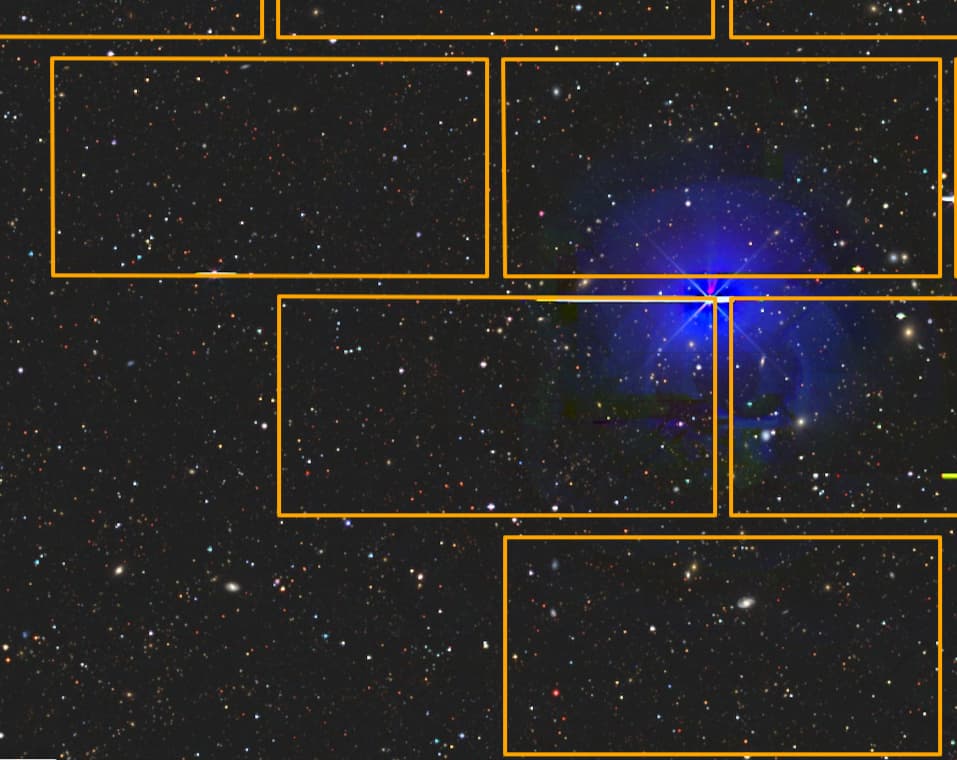

And for reference here’s what the DECam footprint overlaid on the DECaLS sky viewer looks like:

So it seems that we have a case where the wings of a bright star with centroid very near or slightly off the CCD edge are being deblended into large numbers of spurious sources.

One other odd aspect of this that I’m finding concerns memory. If I run, say, (EXPNUM = 430396, CCDNUM = 59) followed by some other “typical” CCD (by specifying a list of dataIds to processCcd and using just one serial Python process), the memory usage spikes during deblending of the bright star’s wings and then remains high at ~23+ GB, even throughout processing of the second, unrelated CCD in the list. It looks as if there’s a “memory leak”, or a failure to deallocate the large amount of memory used during deblending of the bright star’s wings.
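For anyone who wants to check the retained-memory behavior themselves, here is a rough sketch of how the resident memory of a single Python process can be sampled before and after each unit of work. It is Linux-only (it reads `/proc/self/statm`), the names `current_rss_mb`, `rss_around`, and `fake_ccd` are hypothetical, and `fake_ccd` is just a stand-in allocation, not a call into the LSST stack. One caveat: memory that stays resident after a task finishes is not by itself proof of a leak, since the allocator may simply not have handed freed memory back to the operating system.

```python
import gc
import os


def current_rss_mb():
    """Current resident set size of this process in MB (Linux only)."""
    with open("/proc/self/statm") as f:
        resident_pages = int(f.read().split()[1])  # second field: resident pages
    return resident_pages * os.sysconf("SC_PAGE_SIZE") / 1024**2


def rss_around(label, func):
    """Run func(), force a garbage collection, and report the RSS change."""
    before = current_rss_mb()
    func()
    gc.collect()  # rule out Python objects that are merely awaiting collection
    after = current_rss_mb()
    print(f"{label}: {before:.0f} MB -> {after:.0f} MB")
    return {
        "label": label,
        "before_mb": before,
        "after_mb": after,
        "retained_mb": after - before,
    }


def fake_ccd(n_mb):
    """Hypothetical stand-in for processing one CCD: allocate and drop a buffer."""
    def run():
        buf = bytearray(n_mb * 1024 * 1024)
        return len(buf)
    return run


# A "heavy" unit of work followed by a "typical" one, in one serial process.
reports = [
    rss_around("heavy CCD", fake_ccd(64)),
    rss_around("typical CCD", fake_ccd(4)),
]
```

Replacing `fake_ccd(...)` with whatever actually processes one dataId would show whether the resident size after the bright-star CCD stays elevated while the next CCD is processed.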

I mainly wanted to report this and ask whether it’s known, being worked on, or already fixed in more recent pipeline versions (I am hoping to transition all of my DECam-related LSST pipeline processing to Gen3 in the very near future). A related question is whether there are config options I could use to avoid these long run time, high memory scenarios.

I see that there may be significant overlap between this post and “Extreme memory usage during source deblending / measurement”, so I will consult that as well, though I don’t believe that post touches on the possible memory leak.

Thanks very much!