Hi,

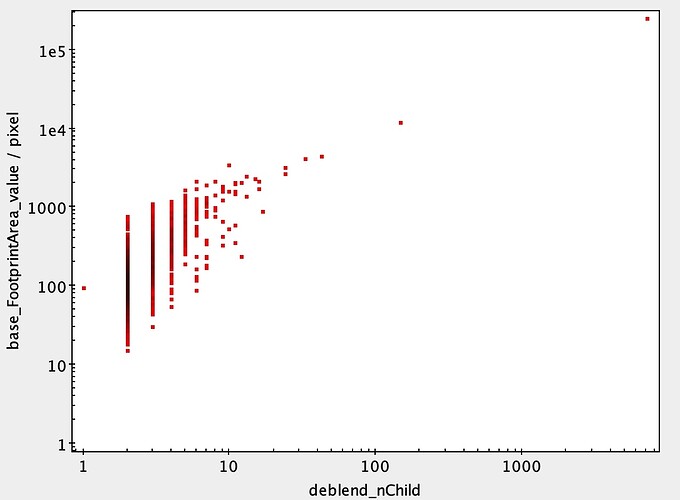

I have recently been seeing very high memory usage when running processCcdTask, which becomes a serious problem in the calibrate.deblend and calibrate.measurement subtasks. Memory usage appears to scale consistently with the number of sources: for a 20-megapixel image, a final catalogue of ~20,000 sources requires around 16.6 GB of RAM.

This is surprisingly high and makes it impossible to process more than a few images at once. Can anyone suggest what is going wrong here?
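To put the figures above in perspective, here is a quick back-of-envelope calculation. It is only a sketch, and several of its inputs are assumptions rather than values from my logs: 1 GB is taken as 10^9 bytes, an exposure is assumed to hold a float32 image, an int32 mask and a float32 variance plane (12 bytes per pixel), and the 64 GB node size is hypothetical.

```python
# Back-of-envelope numbers for a single processCcd run, from the figures above.
# Assumptions (not from the logs): 1 GB = 10**9 bytes, an exposure stores a
# float32 image + int32 mask + float32 variance, and a hypothetical 64 GB node.
PEAK_RAM_GB = 16.6                     # observed peak memory for one run
N_SOURCES = 20_000                     # approximate size of the final catalogue
N_PIXELS = 20_000_000                  # 20 megapixel detector
EXPOSURE_BYTES_PER_PIXEL = 4 + 4 + 4   # assumed image + mask + variance planes
NODE_RAM_GB = 64                       # hypothetical machine size

peak_bytes = PEAK_RAM_GB * 1e9
bytes_per_source = peak_bytes / N_SOURCES
bytes_per_pixel = peak_bytes / N_PIXELS
# How many full-size exposures would fit in the observed peak.
exposure_equivalents = bytes_per_pixel / EXPOSURE_BYTES_PER_PIXEL
# Number of runs that fit side by side on the hypothetical node.
concurrent_runs = int(NODE_RAM_GB // PEAK_RAM_GB)

print(f"{bytes_per_source / 1e3:.0f} kB per source")
print(f"{bytes_per_pixel:.0f} bytes per pixel (~{exposure_equivalents:.0f} exposures)")
print(f"{concurrent_runs} concurrent runs on a {NODE_RAM_GB} GB node")
```

This works out to roughly 830 kB per source, or about 830 bytes per pixel, which is on the order of 69 exposure-sized buffers held at once under the assumed pixel layout.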

The logs look like this; memory usage peaked at the end of calibrate.deblend:

characterizeImage.detection INFO: Detected 11499 positive peaks in 6883 footprints and 8 negative peaks in 3 footprints to 5 sigma

characterizeImage.detection INFO: Resubtracting the background after object detection

characterizeImage.measurement INFO: Measuring 6883 sources (6883 parents, 0 children)

characterizeImage.measurePsf INFO: Measuring PSF

characterizeImage.measurePsf INFO: PSF star selector found 406 candidates

characterizeImage.measurePsf.reserve INFO: Reserved 0/406 sources

characterizeImage.measurePsf INFO: Sending 406 candidates to PSF determiner

characterizeImage.measurePsf.psfDeterminer WARNING: NOT scaling kernelSize by stellar quadrupole moment, but using absolute value

characterizeImage.measurePsf INFO: PSF determination using 391/406 stars.

characterizeImage INFO: iter 2; PSF sigma=2.48, dimensions=(41, 41); median background=547.78

characterizeImage.measurement INFO: Measuring 6883 sources (6883 parents, 0 children)

characterizeImage.measureApCorr INFO: Measuring aperture corrections for 2 flux fields

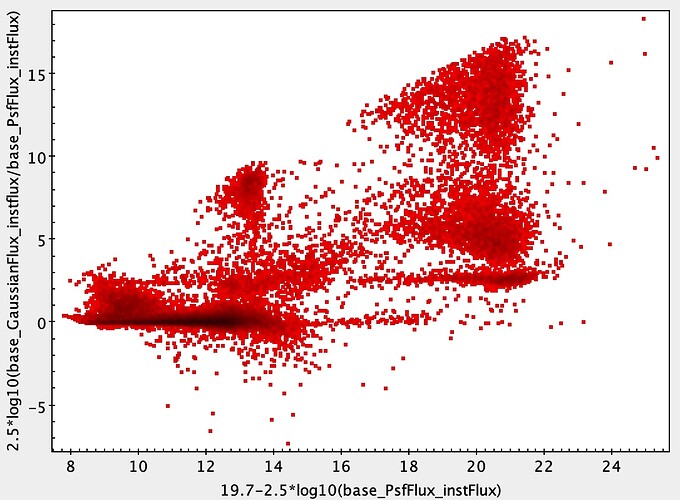

characterizeImage.measureApCorr INFO: Aperture correction for base_GaussianFlux: RMS 0.417713 from 327

characterizeImage.measureApCorr INFO: Aperture correction for base_PsfFlux: RMS 0.286895 from 342

characterizeImage.applyApCorr INFO: Applying aperture corrections to 2 instFlux fields

ctrl.mpexec.singleQuantumExecutor INFO: Execution of task 'characterizeImage' on quantum {instrument: 'Huntsman', detector: 9, visit: 210304183232676, ...} took 68.255 seconds

ctrl.mpexec.mpGraphExecutor INFO: Executed 2 quanta, 1 remain out of total 3 quanta.

calibrate.detection INFO: Detected 11287 positive peaks in 6252 footprints and 8 negative peaks in 2 footprints to 5 sigma

calibrate.detection INFO: Resubtracting the background after object detection

calibrate.skySources INFO: Added 100 of 100 requested sky sources (100%)

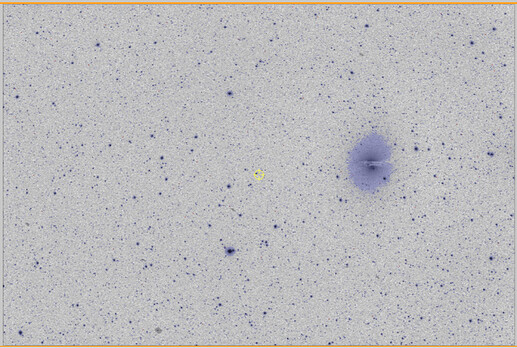

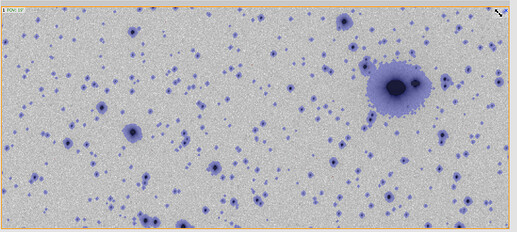

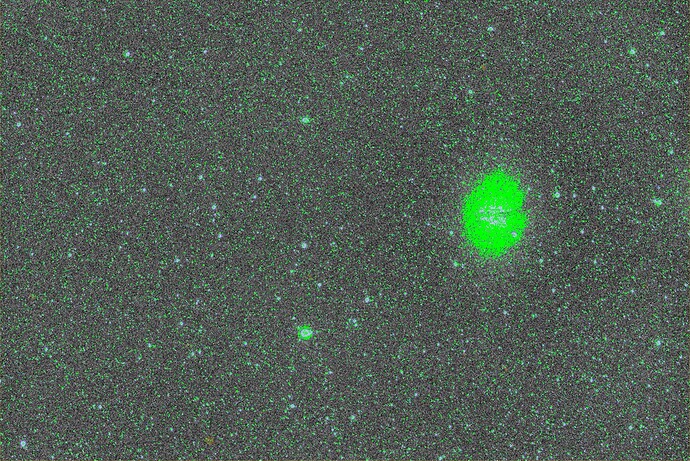

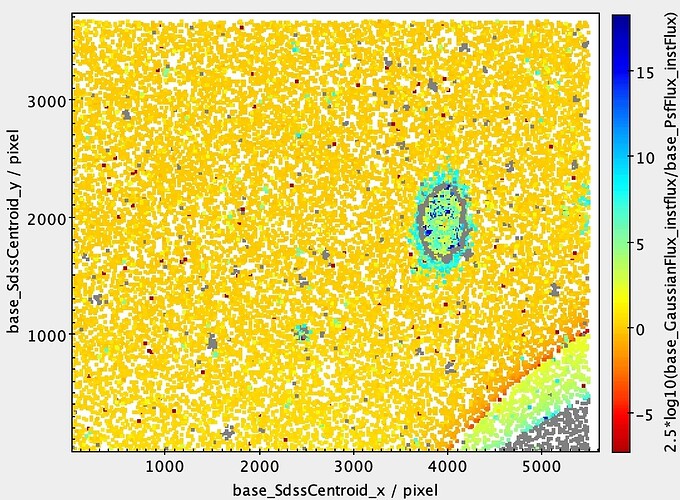

calibrate.deblend INFO: Deblending 6352 sources

meas_deblender.baseline WARNING: Skipping peak at (1495.0, 926.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (1516.0, 919.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (1512.0, 923.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (2453.0, 1474.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (2481.0, 1453.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (2478.0, 1458.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (2466.0, 1462.0): no unmasked pixels nearby

meas_deblender.baseline WARNING: Skipping peak at (2453.0, 1442.0): no unmasked pixels nearby

calibrate.deblend INFO: Deblended: of 6352 sources, 1846 were deblended, creating 6873 children, total 13225 sources

calibrate.measurement INFO: Measuring 13225 sources (6352 parents, 6873 children)

calibrate.applyApCorr INFO: Applying aperture corrections to 2 instFlux fields

calibrate INFO: Copying flags from icSourceCat to sourceCat for 6130 sources

calibrate.photoCal.match.sourceSelection INFO: Selected 1322/13225 sources

calibrate INFO: Loading reference objects from region bounded by [199.65715831, 202.90882873], [-44.13322063, -41.75304918] RA Dec

calibrate INFO: Loaded 1348 reference objects

calibrate.photoCal.match.referenceSelection INFO: Selected 1348/1348 references

calibrate.photoCal.match INFO: Matched 59 from 1322/13225 input and 1348/1348 reference sources

calibrate.photoCal.reserve INFO: Reserved 0/59 sources

calibrate.photoCal INFO: Not applying color terms because config.applyColorTerms is None and data is not available and photoRefCat is provided

calibrate.photoCal INFO: Magnitude zero point: 25.837880 +/- 0.001690 from 57 stars

Note that I am using the Gen3 middleware and the latest weekly Docker build. I have not found a similar topic on the Community Forum.
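For anyone trying to reproduce this, below is a minimal sketch of one way to record the peak memory of a run from Python, using only the standard library (`resource` and `subprocess`, Linux or macOS only). The command in the example is a placeholder, not my actual pipetask invocation, and the helper name `peak_child_rss_bytes` is hypothetical. Note that `RUSAGE_CHILDREN` reports the largest single waited-for child process, not the sum across parallel workers.

```python
import resource
import subprocess
import sys


def peak_child_rss_bytes(cmd):
    """Run cmd to completion, then return the largest peak resident set size
    (in bytes) of any child process this Python process has waited for."""
    subprocess.run(cmd, check=True)
    usage = resource.getrusage(resource.RUSAGE_CHILDREN)
    # ru_maxrss is reported in kilobytes on Linux but in bytes on macOS.
    scale = 1 if sys.platform == "darwin" else 1024
    return usage.ru_maxrss * scale


if __name__ == "__main__":
    # Placeholder command: replace with the actual pipeline invocation.
    cmd = [sys.executable, "-c", "buf = bytearray(100_000_000)"]
    print(f"peak RSS: {peak_child_rss_bytes(cmd) / 1e9:.2f} GB")
```

When several quanta run in parallel, each worker process is measured separately, so the total footprint of the node can be larger than the number this reports.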