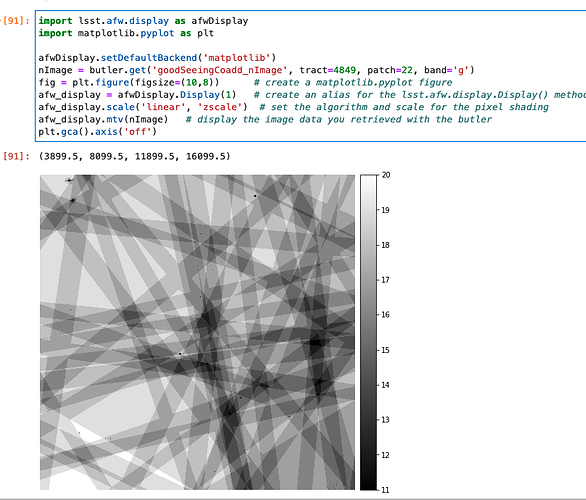

Also, I think one way to find the input visits that went into a given template is to look in the logs. I'm not sure the following are the right datasets for your purposes, but here is an example:

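```python
from lsst.daf.butler import Butler

config = "dp02"
collection = "2.2i/runs/DP0.2"
skyMap = "DC2"
tract = 4849
patch = 22

# Extra keyword arguments (here skymap) are used as default data ID values
butler = Butler(config=config, collections=collection, skymap=skyMap)

# Log of the task that built the g-band template for this tract/patch
templateGen_log = butler.get("templateGen_log", band="g", tract=tract, patch=patch)

inputVisitList = []
for logLine in templateGen_log:
    # These lines report the weight given to each input warp (one per visit)
    if "Weight of deepCoadd_psfMatchedWarp" in logLine.message:
        print(logLine.message)
        msg = logLine.message
        # Take the text after "visit: " (7 characters) up to the next comma
        visit = msg[msg.find("visit") + 7:]
        visit = visit[:visit.find(",")]
        inputVisitList.append(visit)

print("inputVisitList = ", inputVisitList)
```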

gives:

```
Weight of deepCoadd_psfMatchedWarp {instrument: 'LSSTCam-imSim', skymap: 'DC2', tract: 4849, patch: 22, visit: 193784, ...} = 1692.563
Weight of deepCoadd_psfMatchedWarp {instrument: 'LSSTCam-imSim', skymap: 'DC2', tract: 4849, patch: 22, visit: 193824, ...} = 2088.092
[...]
inputVisitList =  ['193784', '193824', '254349', '254364', '399393', '419767', '449903', '449904', '449955', '680275', '680296', '697992', '703769', '719589', '921284', '921309', '1170053', '1206080', '1206127', '1225465', '1225497']
```
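The slicing in the loop relies on there being exactly one space after `visit:` and a comma after the number. A slightly more defensive sketch, assuming the log message format shown in the output above, pulls the visit ID out with a regular expression, converts it to an integer and skips duplicates. `extract_visits` is a hypothetical helper, not part of the Butler API; you would call it as `extract_visits(line.message for line in templateGen_log)`.

```python
import re

# Matches the data-ID fragment "visit: <digits>" in messages such as
# "Weight of deepCoadd_psfMatchedWarp {..., visit: 193784, ...} = 1692.563"
VISIT_RE = re.compile(r"\bvisit:\s*(\d+)")


def extract_visits(messages, marker="Weight of deepCoadd_psfMatchedWarp"):
    """Return visit IDs (as ints) from messages containing `marker`.

    Visits are returned in first-seen order, without duplicates.
    The message format is assumed from the templateGen_log output above.
    """
    visits = []
    for msg in messages:
        if marker not in msg:
            continue  # not a per-warp weight line
        match = VISIT_RE.search(msg)
        if match:
            visit = int(match.group(1))
            if visit not in visits:
                visits.append(visit)
    return visits


if __name__ == "__main__":
    sample = [
        "Weight of deepCoadd_psfMatchedWarp {instrument: 'LSSTCam-imSim', skymap: 'DC2', "
        "tract: 4849, patch: 22, visit: 193784, ...} = 1692.563",
        "Weight of deepCoadd_psfMatchedWarp {instrument: 'LSSTCam-imSim', skymap: 'DC2', "
        "tract: 4849, patch: 22, visit: 193824, ...} = 2088.092",
        "Some unrelated log line",
    ]
    print(extract_visits(sample))
```

Returning integers rather than strings makes the IDs directly usable as `visit=` values in a data ID, and the de-duplication is only a precaution in case a visit's weight is logged more than once.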

You may also need to dig into the makeWarp logs to see whether individual detectors were “de-selected” while the warps were generated. Look for lines containing “Removing” or “Deselecting”/“deselecting”/“de-selecting” (I'm not sure that covers them all), e.g.:

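```python
# makeWarp log for one of the input visits found above
makeWarp_log = butler.get("makeWarp_log", band="g", tract=4849, patch=22, visit=193784)

for logLine in makeWarp_log:
    # "eselect" catches both "Deselect..." and "deselect..."
    if "Removing" in logLine.message or "eselect" in logLine.message or "e-select" in logLine.message:
        print(logLine.message)
```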

tells you:

```
Removing visit 193784 detector 136 because median e residual too large: 0.004584 vs 0.004500
```
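If you want to check many visits, a sketch like the following could group the removals by visit. It assumes removal messages follow the “Removing visit ... detector ... because ...” wording shown above; anything else that passes the same loose filter is returned unparsed for manual inspection, since other de-selection messages may be worded differently. `collect_removed_detectors` is a hypothetical helper; in practice you might feed it `[line.message for line in makeWarp_log]` for each visit in `inputVisitList`.

```python
import re
from collections import defaultdict

# Matches messages like the one above, e.g.
# "Removing visit 193784 detector 136 because median e residual too large: ..."
REMOVED_RE = re.compile(r"Removing visit (\d+) detector (\d+) because (.+)")


def collect_removed_detectors(messages):
    """Group removed detectors by visit.

    Returns (removed, unparsed):
      removed  -- dict mapping visit -> list of (detector, reason)
      unparsed -- other messages passing the loose "Removing"/"eselect"/"e-select"
                  filter that do not match the assumed wording above
    """
    removed = defaultdict(list)
    unparsed = []
    for msg in messages:
        match = REMOVED_RE.search(msg)
        if match:
            visit, detector, reason = match.groups()
            removed[int(visit)].append((int(detector), reason))
        elif "Removing" in msg or "eselect" in msg or "e-select" in msg:
            # Same filter as the makeWarp_log loop; keep for manual inspection
            unparsed.append(msg)
    return dict(removed), unparsed


if __name__ == "__main__":
    sample = [
        "Removing visit 193784 detector 136 because median e residual too large: 0.004584 vs 0.004500",
        "Some unrelated log line",
    ]
    removed, unparsed = collect_removed_detectors(sample)
    print(removed)
    print(unparsed)
```

Returning the unmatched candidates separately, rather than dropping them, keeps the uncertainty about other message formats visible instead of silently hiding de-selections the regex does not recognize.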