Hi @eastonhonaker, thanks for posting.

Based on the error message, it looks like the service thinks the user table ut1 does not exist. As I understand it, ut1 needs to be an astropy table and to exist in memory. To help with the diagnosis of this error, we need the code snippet that shows how ut1 is defined.

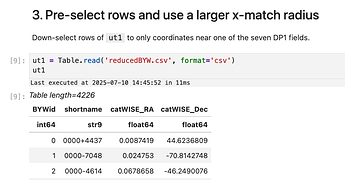

And here’s a bit of extra info that might help. In the examples in this related topic, ut1 is generated from SDSS.query_region and is an astropy table. In the example in this DP0.3 tutorial notebook, ut1 is read in from a file that has column names in the first row with no # symbol at the start of the row.

```python
from astropy.table import Table

fnm1 = 'data/dp03_06_user_table_1.cat'

ut1 = Table.read(fnm1, format='ascii.basic')
```
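If your table comes from a file, it may be worth checking the header row before reading it. Below is a minimal sketch using only the Python standard library. The helper name `header_is_plain`, the demo file name, and the column names and values are hypothetical and just for illustration. It reports whether the first non-blank line starts with a `#`.

```python
import os
import tempfile


def header_is_plain(path):
    """Return True if the first non-blank line does not start with '#'."""
    with open(path, "r", encoding="utf-8") as f:
        for line in f:
            stripped = line.strip()
            if stripped:
                return not stripped.startswith("#")
    return False  # empty file: there is no header row at all


# Demo on a throwaway file; column names and values are made up.
with tempfile.TemporaryDirectory() as tmpdir:
    demo_path = os.path.join(tmpdir, "demo_user_table.cat")

    # Header row without a leading '#', as in the tutorial's file.
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("ra dec\n10.5 -30.2\n")
    plain_ok = header_is_plain(demo_path)

    # Same content, but with a commented header row.
    with open(demo_path, "w", encoding="utf-8") as f:
        f.write("# ra dec\n10.5 -30.2\n")
    commented_ok = header_is_plain(demo_path)

print(plain_ok, commented_ok)
```

If the check returns `False` for your file, the header row is commented out. In that case astropy's `ascii.commented_header` format is probably a better match than `ascii.basic`.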

Let us know if that solves the issue. If the error persists, please post the code snippet where the table is defined.